Visionic designs off-the-shelf optical guidance and control solutions for complex industrial manufacturing processes. As an integrator, it aims for continuous improvement of industrial performance.

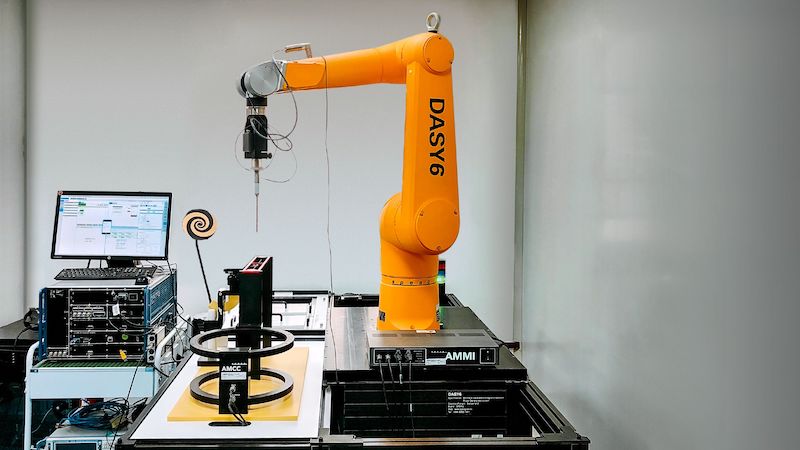

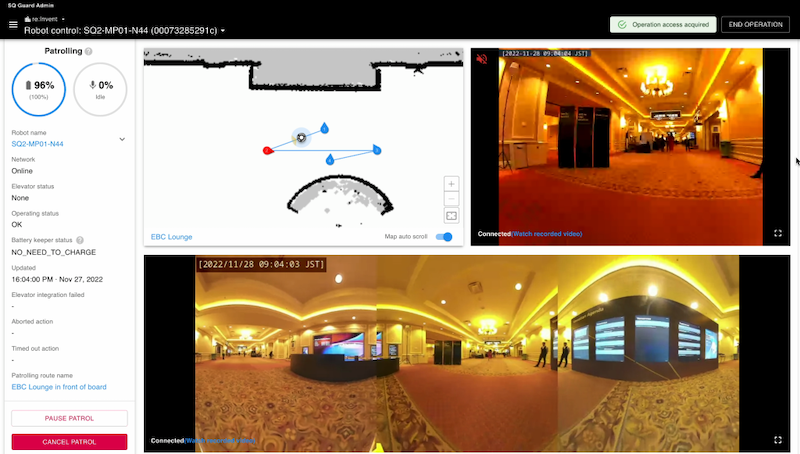

Fuzzy Logic Robotics, with its no-code software that allows non-experts to create, simulate and control a robot cell in real time, aims to democratize industrial robotics.

Together, Fuzzy Logic and Visionic are removing the technological and financial obstacles to the robotization of applications that were previously unheard of, or perceived as impossible, such as pressure cleaning and the decontamination of engine parts in the aeronautics industry.