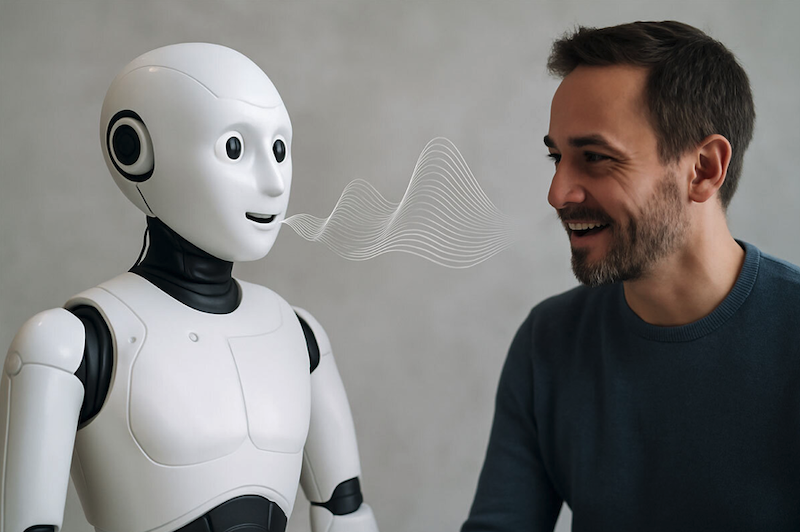

Richtech Robotics, a US-based provider of AI-driven robots operating in commercial and industrial environments, has announced a hands-on collaboration with Microsoft through the Microsoft AI Co-Innovation Labs to jointly develop and deploy agentic artificial intelligence capabilities in real-world robotic systems.

Richtech Robotics’ engineering team worked with Microsoft’s AI Co-Innovation Labs to enhance the company’s Adam robot with adaptive intelligence powered by Azure AI.

The collaboration focused on applying vision, voice, and autonomous reasoning to physical environments, enabling robots to move beyond task execution and support more contextual, conversational, and operationally aware interactions.