The big AMR fleet computing debate: Distributed vs centralized

By Limor Schweitzer, CTO and founder, MOV.AI

A shift in mobile robot computing needs

Over the past year, we have seen the growing adoption of centralized computing in Autonomous Mobile Robot (AMR) fleets. In this article, we ask if this trend is the best approach, especially in advanced deployments with a large number of AMRs and dynamic, non-sterile environments.

Past generations of AMRs, or more accurately Automated Guided Vehicles (AGVs), operated in sterile environments, with physically marked fixed routes and simple, predefined tasks. The computing requirements were almost nonexistent.

Today's AMRs are different.

In the past few years, thanks to increased CPU power and reduced cost of 360-degree LIDARs, localization algorithms have matured and become less sensitive to dynamic surroundings.

This, in turn, led to the successful deployment of mobile robots in new industries where chaos prevails: random boxes, garbage, and pallets surround the robot trajectories, and hundreds of humans and vehicles move along the same paths as the robots.

Advanced AMR implementations include a large number of autonomous robots operating in dynamic, non-sterile environments. AMR fleets are expected to offer not just efficiency, but also flexibility and agility.

They are expected to be able to:

- effectively distribute intra-logistics tasks, picking, dropping and moving around their payloads;

- find optimal, dynamically changing paths while avoiding congestion;

- charge themselves in an optimal opportunistic way considering peak requirements for intra-logistics tasks; and

- be cost-effective compared to human labor.

All this requires significant processing power. In the next sections, we will look at the typical decision-making performed by mobile robots and AMR fleets, and the pros and cons of centralized and distributed computing when handling these processes.

AMR computing requirements

A specific set of decisions is necessary for AMR fleets. These can be made by either a centralized or a distributed computing system:

Robot-level decisions

Safety – AMR safety is a complex and partly regulated regime, dealing with different scenarios that may pose a risk to humans. To mitigate such risks, safety frameworks call for hardware that is certified to meet defined performance levels (PL c, PL d, etc.), which in turn guarantee that the safety mechanisms function properly even in adverse conditions such as fire and electrical malfunctions.

In that sense, the safety hardware in an AMR is not dependent on the software that handles localization and AI. It is an independent subsystem that provides highly reliable performance to guarantee that the vehicle does not injure people. The safety subsystem resides on the robot itself and reports to the OS and high-level software; however, the high-level software usually cannot interfere with the safety subsystem.

Localization – Advanced SLAM technologies use input from sensors and cameras, together with image processing, to identify the robot’s location. This requires significant data acquisition and significant processing resources, at a very high (almost continuous) frequency.

Side note – there are a few indoor-GPS systems based on UWB and cameras, which provide an external source of positional information to the robots. These, however, have not gained much popularity, and self-localization is the prevailing method of choice for AMRs.

Path planning – Here too, the goal of advanced AMRs is not to use a standard, predefined route, but to calculate the optimal route between the robot’s current location and its destination. The optimal route may change along the way, depending on newly identified obstacles or accumulating congestion. In addition, the planned turn radius for taking a corner safely may depend on the load size, and safety distances from people and equipment may be extended to account for heavy loads.

Dynamic path planning is performed using data generated locally by the robot and external data sources. The amount of data and required processing power are not very high, but the frequency is.
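
As a minimal sketch of dynamic replanning, the example below plans a route on an occupancy grid and recalculates it only when a newly detected obstacle falls on the remaining route. The grid representation, the function names `plan_path` and `replan_if_blocked`, and the use of breadth-first search are illustrative assumptions; production planners typically work on richer cost maps with more capable algorithms.

```python
from collections import deque

def plan_path(grid, start, goal):
    """Shortest 4-connected path on a grid via breadth-first search.

    grid[r][c] == 1 marks a blocked cell. Returns a list of (row, col)
    cells from start to goal, or None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:      # walk back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] == 0 and nxt not in came_from):
                came_from[nxt] = cell
                queue.append(nxt)
    return None

def replan_if_blocked(grid, path, position_index, new_obstacle):
    """Record a newly detected obstacle and replan from the current cell
    only if the obstacle lies on the remaining part of the path."""
    r, c = new_obstacle
    grid[r][c] = 1
    if new_obstacle in path[position_index:]:
        return plan_path(grid, path[position_index], path[-1])
    return path[position_index:]
```

Because the check is cheap and the search runs only when the route is actually affected, a loop like this can be repeated at high frequency, which matches the profile described above: modest data and processing per decision, but many decisions per second.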

Risk prevention – An intelligent layer of protection that augments component-level safety. Risk prevention adjusts robot behavior, taking into consideration specific AMR, load, and environmental parameters to ensure smooth and safe operation and add an extra layer of protection to people, equipment, and goods. Examples include obstacle avoidance, turn radius calculation and speed adjustments. Risk prevention decisions are taken on a local level, based on specific, constantly changing parameters.
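
One way to picture a speed adjustment of this kind is to cap the speed so that the robot can always stop within the free distance ahead, with a heavier load lengthening the stopping distance. The sketch below is only an illustration: the function name `safe_speed`, the linear load model and every default value are assumptions, not certified limits or any vendor's method.

```python
import math

def safe_speed(free_distance_m, load_kg, max_speed=2.0, base_decel=1.0,
               rated_load_kg=500.0, margin_m=0.5):
    """Largest speed (m/s) from which the robot can still stop before an obstacle.

    All defaults are illustrative assumptions.
    """
    # Assumption: braking deceleration degrades linearly with load,
    # down to half of base_decel at the rated load.
    load_ratio = min(max(load_kg / rated_load_kg, 0.0), 1.0)
    decel = base_decel * (1.0 - 0.5 * load_ratio)
    # Keep a fixed safety margin in front of the obstacle.
    usable = max(free_distance_m - margin_m, 0.0)
    # Stopping distance d = v^2 / (2a), so v = sqrt(2 * a * d).
    return min(max_speed, math.sqrt(2.0 * decel * usable))
```

With these assumed values, an unloaded robot with 1 m of free space ahead is limited to 1 m/s, while the same robot carrying its rated load is limited to about 0.7 m/s.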

Fleet-level decisions

Task allocation and prioritization – This is another decision that is different and more complex in advanced AMR implementations. Unlike with AGVs or basic implementations, tasks are not always predefined in the automation process; they may also be generated dynamically by a human or by input from other systems and devices. Examples include sending a cleaning robot to clear up a spill, going to the charging station, or picking up a loaded trolley.

Task allocation and prioritization can also be interdependent. For example, the decision as to whether an AMR should go to the charging station or perform a task depends on the exact battery level, and the power required to perform the task.
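
The charge-or-work decision can be sketched as a simple energy budget check. The function name, the watt-hour units and the reserve value are hypothetical; a real fleet would use its own battery model.

```python
def should_charge_first(battery_wh, task_energy_wh, return_to_charger_wh,
                        reserve_wh=50.0):
    """True if the robot cannot complete the task and still reach a charger
    with the reserve left. All quantities are in watt-hours (assumed units)."""
    return battery_wh < task_energy_wh + return_to_charger_wh + reserve_wh
```

For example, a robot with 200 Wh left can accept a task that needs 100 Wh plus 30 Wh to return to the charger, while a robot with 150 Wh left should charge first under the assumed 50 Wh reserve.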

The relevant information is derived from numerous data sources. For example, external cameras can indicate traffic, the location of available AMRs is provided by the AMRs, and task priority by the Task Manager. The amount of data, processing power required and frequency are not very high.

Task allocation is a non-trivial mathematical optimization problem that optimizes fleet success KPIs for a given set of static and dynamic constraints.
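
To make the optimization framing concrete, here is a toy sketch that searches every one-to-one assignment of tasks to robots, skips assignments that violate a constraint, and keeps the cheapest one. The robot and task data, the distance-based cost and the battery constraint are all made up for illustration, and exhaustive search is only practical for very small fleets; real systems would use a dedicated solver or heuristic.

```python
from itertools import permutations

def allocate(robots, tasks, cost, feasible):
    """Exhaustively search one-to-one assignments of tasks to robots.

    Returns (total_cost, {task: robot}) for the cheapest feasible
    assignment, or None if no feasible assignment exists.
    """
    best = None
    for chosen in permutations(robots, len(tasks)):
        pairs = list(zip(tasks, chosen))
        if not all(feasible(r, t) for t, r in pairs):
            continue                      # violates a constraint
        total = sum(cost(r, t) for t, r in pairs)
        if best is None or total < best[0]:
            best = (total, dict(pairs))
    return best

# Hypothetical data: positions along a single aisle (metres), battery (Wh).
robot_pos = {"r1": 0, "r2": 10}
robot_battery = {"r1": 20, "r2": 80}
task_pos = {"t1": 2, "t2": 9}
task_energy = {"t1": 30, "t2": 10}

result = allocate(
    robots=list(robot_pos),
    tasks=list(task_pos),
    cost=lambda r, t: abs(robot_pos[r] - task_pos[t]),        # static: distance
    feasible=lambda r, t: robot_battery[r] >= task_energy[t],  # dynamic: battery
)
```

In this example the nearest-robot assignment is rejected because `r1` lacks the energy for `t1`, so the search settles on the more expensive but feasible assignment.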

Conflict resolution – This is a new type of decision which results from dynamic rather than predetermined robot behavior. Conflicts can occur when two robots cross paths or plan on using the same route at the same time. The amount of data, the processing power required and the frequency of conflict resolution are not high. However, decision speed is essential to avoid idle wait time.
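
A minimal sketch of one possible resolution rule: represent each planned route as a list of grid cells indexed by time step, detect the first step at which two robots would share a cell or swap cells head-on, and let the lower-priority robot wait. The function names, the time-stepped representation and the wait-at-start policy are assumptions chosen for brevity; the sketch also ignores robots once their path has ended.

```python
def find_conflict(path_a, path_b):
    """Return the first time step at which both robots occupy the same cell
    or swap cells head-on, or None if the paths are compatible."""
    for t in range(min(len(path_a), len(path_b))):
        if path_a[t] == path_b[t]:
            return t
        if t > 0 and path_a[t] == path_b[t - 1] and path_a[t - 1] == path_b[t]:
            return t
    return None

def resolve_by_waiting(path_high, path_low, max_delay=10):
    """Delay the lower-priority robot at its start cell until no conflict
    remains. Returns the delayed path, or None if max_delay is not enough."""
    for delay in range(max_delay + 1):
        delayed = [path_low[0]] * delay + path_low
        if find_conflict(path_high, delayed) is None:
            return delayed
    return None
```

Both functions are cheap to evaluate, so the cost of a conflict lies mainly in how long the robots idle before a decision arrives.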

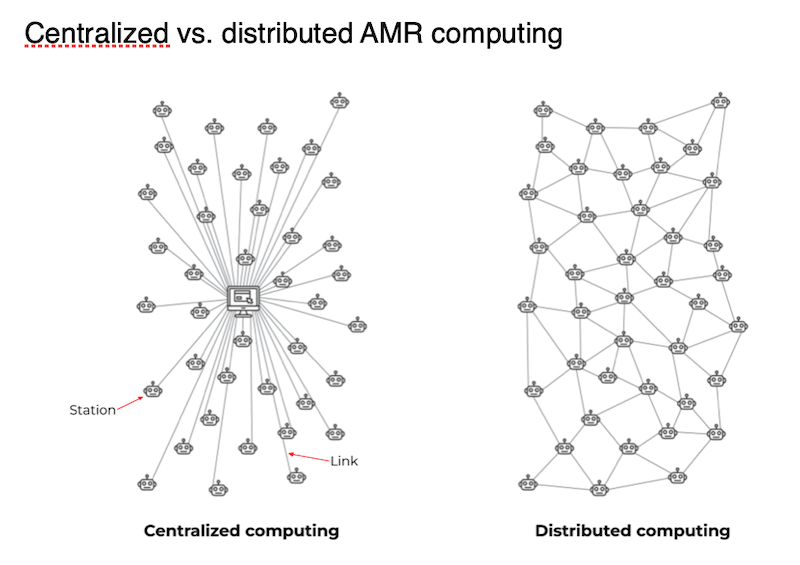

Centralized computing: All AMRs are connected to a central computer (on-prem or cloud-based) that performs all analysis and decisions and transmits them to individual robots. In case of issues or events, the robot will cease all activity until new instructions are received.

Distributed computing: Each AMR receives all available data, performs independent analysis and decision making, and updates the network with the new data.
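
The behavioral difference between the two models can be sketched with two toy robot classes. Everything here is hypothetical (class names, the string states, the `central_decide` and `local_decide` callbacks); the point is only to contrast what happens when an event occurs while the network link is down.

```python
class CentralizedRobot:
    """Executes only instructions received from the central computer."""

    def __init__(self):
        self.state = "moving"

    def on_event(self, event, link_up, central_decide):
        # Cease activity, then resume only if the central computer is reachable.
        self.state = "stopped"
        if link_up:
            self.state = central_decide(event)
        return self.state


class DistributedRobot:
    """Decides locally and shares new data with the network when it can."""

    def __init__(self, local_decide):
        self.local_decide = local_decide
        self.state = "moving"
        self.outbox = []          # updates waiting to be shared

    def on_event(self, event, link_up):
        self.state = self.local_decide(event)   # decision needs no network
        self.outbox.append(event)
        if link_up:
            sent, self.outbox = self.outbox, []
            return self.state, sent
        return self.state, []
```

With the link down, the centralized robot stays `"stopped"`, whereas the distributed robot keeps working and publishes its queued updates once coverage returns.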

Pros and cons of a centralized AMR fleet software architecture

Pros

- The main benefit of a centralized (local or cloud-based) architecture is that there is a single entity optimizing the fleet behavior, theoretically leading to optimal results. Given infinite resources, this may be the case. However, resource consumption is one of the main cons of a centralized architecture.

- Another benefit is the ability to leverage the central computer to synchronize between different robot types and different data sources, an approach promoted by VDA 5050.

- A centralized architecture can also be run as a cloud-based service.

Cons

- The drawback of a centralized architecture is infrastructure requirements, namely connectivity and processing power. Most warehouses or manufacturing centers are fully covered by WiFi, with 5G starting to appear as well. WiFi capacity is limited and is also shared with a growing number of IoT devices. Processing power can be cloud-based, yet the same issues apply: cost and networking capacity.

- Since the centralized model depends on network connectivity, coverage is another concern, with dead zones and low-coverage areas. If an AMR runs into a spill in an area without coverage, it will get “stuck”, requiring on-premise human intervention.

The processing power required to control and operate an AMR fleet depends on the number of AMRs and the type of processes they run. As noted, advanced path planning for dynamic “dirty” environments requires significant processing and frequent information transfer.

In a deployment with dozens or hundreds of AMRs, this easily amounts to a dedicated data center, with the related air conditioning and investment in high-availability technology to avoid a single point of failure.

It is worth noting that even in a centralized approach, mapping and localization are performed locally on the AMR due to the high data requirements and time sensitivity. While some suggest that in the future, when bandwidth and latency allow, these functions may be performed centrally, we do not consider this a viable option.

Pros and cons of distributed AMR fleet software architecture

Pros

- The main benefit of a distributed AMR fleet from a computing point of view is resource optimization. As decisions can be taken locally – using the processor installed to support the SLAM algorithm – there is no need for a large central data center. You already have a distributed data center running around in your facility.

- What’s more, since the AMR can perform analysis locally, the analysis can run more frequently, which supports more advanced path planning. The AMR’s ability to make decisions locally also lowers its sensitivity to connectivity issues, allowing it to continue to operate seamlessly even in areas with low or no coverage.

- Distributed networks are also easily scalable and more robust, as there is no single point of failure.

Cons

- The main drawback of a distributed computing architecture is its complexity. It requires knowledge of parallel processing and distributed databases.

- Another drawback is the possibility of conflict and the lack of a central authority. What happens if two different AMRs decide to perform the same task?
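
One common way to handle duplicate claims without a central authority is a deterministic tie-break that every robot evaluates identically. The sketch below assumes that each robot eventually sees the same set of claims (which in practice requires reliable message delivery) and uses a hypothetical rule: the lowest estimated cost wins, with ties going to the lowest robot ID.

```python
def winner(claims):
    """Pick the robot that keeps the task.

    claims: iterable of (robot_id, estimated_cost) pairs for one task.
    Lowest cost wins; ties are broken by the lowest robot_id, so every
    robot applying this rule to the same claims reaches the same answer.
    """
    return min(claims, key=lambda claim: (claim[1], claim[0]))[0]
```

Because the result does not depend on the order in which claims arrive, two robots that both claimed the task can each run `winner` locally, and the loser simply releases the task.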

It’s not all black and white

The drawbacks of both central and distributed architectures are known to vendors, who take steps to mitigate some of these issues. Some central computing implementations allow robots a certain level of autonomy – for example, freedom of movement within a corridor that the central computer assigns during path planning.

In addition, some tasks such as monitoring, analytics and alerts, or remediation in cases of conflict, are best performed centrally, even in a distributed implementation.

Advanced AMR deployments require hybrid computing

For most aspects of advanced, dynamic AMR implementations there is no alternative to distributed computing. Handled centrally, the large amount of processing power, the number of requests per second, and the network requirements imply prohibitive costs.

As a result, centralized AMR deployments place a cap on the recalculation frequency. This places an artificial handicap on AMR capabilities.
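
A back-of-the-envelope sketch shows where such a cap comes from. Assume the central computer can produce a fixed number of plans per second and the wireless network can carry a fixed number of bytes per second; each robot's recalculation rate is then bounded by whichever shared resource runs out first. The function name and all figures below are hypothetical.

```python
def max_replan_rate_hz(num_robots, central_plans_per_s,
                       link_bytes_per_s, bytes_per_update):
    """Upper bound on per-robot recalculation frequency in a centralized fleet."""
    cpu_limit = central_plans_per_s / num_robots
    net_limit = link_bytes_per_s / (bytes_per_update * num_robots)
    return min(cpu_limit, net_limit)

# Hypothetical figures: 500 plans/s of central capacity, 2 MB/s of usable
# wireless throughput, 10 kB of sensor and state data per recalculation.
small_fleet = max_replan_rate_hz(10, 500, 2_000_000, 10_000)
large_fleet = max_replan_rate_hz(100, 500, 2_000_000, 10_000)
```

Under these assumed figures, growing the fleet from 10 to 100 robots drops the achievable rate from 20 to 2 recalculations per second per robot, with the network rather than the processor as the limiting factor.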

However, some functionalities such as monitoring, analytics, alerts, or remediation in cases of conflict are best performed by centralized computing.

Rapid deployment of advanced AMRs in dynamic, brownfield sites, taking into consideration the way humans operate, requires hybrid computing, which combines the best of central and distributed computing.

About the author: Limor Schweitzer is the CTO and founder of MOV.AI and a serial entrepreneur in the field of robotics. MOV.AI’s ROS-based Robotics Engine Platform provides AMR manufacturers and automation integrators everything needed to easily develop enterprise-grade robots. It includes a ROS-based IDE, off-the-shelf autonomous navigation algorithms, deployment tools, and operational tools such as fleet management, flexible interfaces with warehouse environments such as ERP and WMS, cyber-security, and APIs.