Nvidia unveils new reinforcement learning research at ICRA 2019

Nvidia researchers from the newly opened robotics research lab in Seattle, Washington have presented a new proof of concept reinforcement learning approach that aims to enhance how robots trained in simulation will perform in the real world. (See video below.)

The work was presented at the recent International Conference on Robotics and Automation (ICRA) in Montreal, Canada.

The research is part of a growing trend in the deep learning and robotics community that relies on simulation for training.

Since the method is virtual, there’s no risk of damage or injury, allowing the robot to train for potentially an unlimited number of times, before deploying to the real world.

One way to describe simulation training is to compare it to how astronauts train on earth for key space missions.

They learn to withstand the punishing G-forces that come with space travel, rehearse and practice all aspects of a mission, and how to perform critical operations so they can per form them flawlessly in space.

Reinforcement learning in simulation aims to do the same but with robots.

Ankur Handa, one of the lead researchers on the project, says: “In robotics, you generally want to train things in simulation because you can cover a wide spectrum of scenarios that are difficult to get data for in the real world.

“The idea behind this work is to train the robot to do something in the simulator that would be tedious, and time-consuming in real life.”

Handa says that one of the challenges researchers in the reinforcement learning robotics community face is the discrepancy between what is in the real world and simulator.

“Due to the imprecise simulation models and lack of high fidelity replication of real-world scenes, policies learned in simulations often cannot be directly applied on real-world systems, a phenomenon that is also known as the reality gap,” the researchers state in their paper.

“In this work, we focus on closing the reality gap by learning policies on distributions of simulated scenarios that are optimized for a better policy transfer.”

“Rather than manually tuning the randomization of simulations, we adapt the simulation parameter distribution using a few real world roll-outs interleaved with policy training,” Handa says. “We’re essentially creating a replica of the real world in the simulator.”

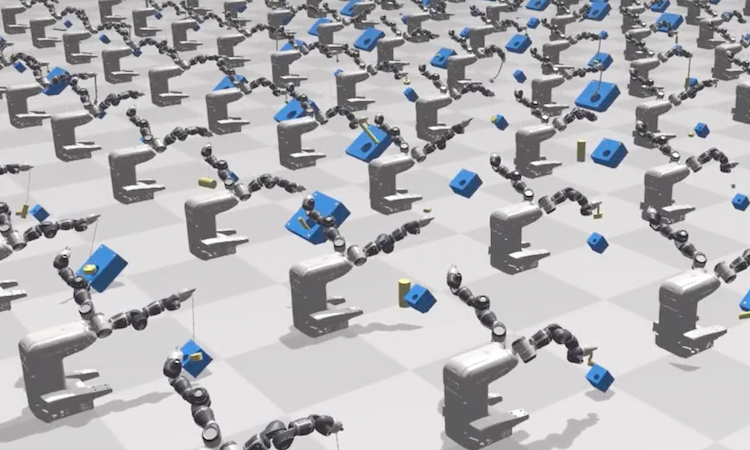

Using a cluster of 64 Nvidia Tesla V100 GPUs, with the cuDNN-accelerated TensorFlow deep learning framework, the researchers trained a robot to perform two tasks: placing a peg in a hole and opening a drawer.

For the simulation, the team used the Nvidia FleX physics engine to simulate and develop the SimOpt algorithm described in this research work.

For both tasks, the robot learns from over 9600 simulations each over around 1.5-2 hours, allowing it to swing a peg into a hole and open a drawer accurately.

“Closing the simulation to reality transfer loop is an important component for a robust transfer of robotic policies,” the researchers say.

“In this work, we demonstrated that adapting simulation randomization using real world data can help in learning simulation parameter distributions that are particularly suited for a successful policy transfer without the need for exact replication of the real world environment.”