Microsoft Research has announced Rho-alpha, a new robotics model designed to help robots understand natural language instructions and carry out complex physical tasks in less structured environments.

The model, derived from Microsoft’s Phi series of vision-language models, is being made available through the company’s Research Early Access Program. According to Microsoft, Rho-alpha is intended to advance a new generation of robotics systems capable of perceiving, reasoning, and acting in dynamic real-world settings.

For decades, robots have performed best in tightly controlled environments such as factories and warehouses, where tasks are predictable and carefully scripted. Recent advances in agentic AI, however, are enabling new “vision-language-action” models that allow physical systems to operate with greater autonomy.

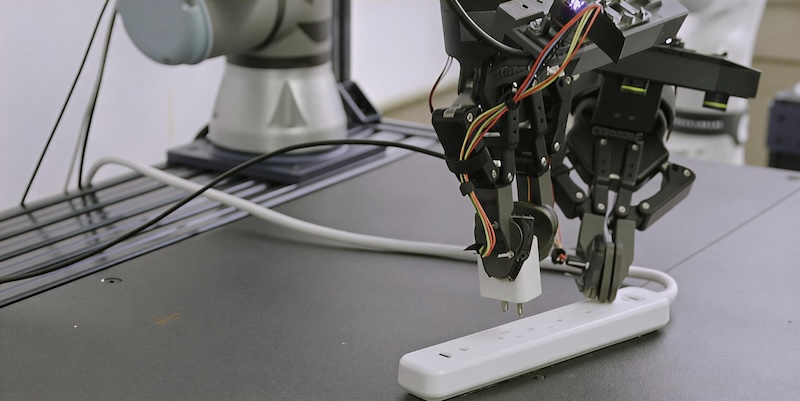

Rho-alpha belongs to this category, translating natural language commands into control signals for robotic systems performing bimanual manipulation tasks. Microsoft describes it as a “VLA+” model because it extends beyond traditional vision and language inputs by incorporating additional sensing modalities.

One of those additions is tactile sensing. Microsoft Research said Rho-alpha integrates touch data, with ongoing work to support other modalities such as force sensing. The company also said the model is designed to improve over time during deployment by learning from feedback provided by people interacting with the robot.

Training the model relies heavily on synthetic data. Microsoft Research developed a multistage training pipeline that combines reinforcement learning with simulation built on Nvidia's Isaac Sim framework, generating large volumes of training data without requiring extensive real-world teleoperation.

The scarcity of diverse real-world robotics data remains a major challenge for foundation models, according to researchers involved in the project.

Abhishek Gupta, assistant professor at the University of Washington, says: "While generating training data by teleoperating robotic systems has become a standard practice, there are many settings where teleoperation is impractical or impossible.

“We are working with Microsoft Research to enrich pre-training datasets collected from physical robots with diverse synthetic demonstrations using a combination of simulation and reinforcement learning.”

Nvidia, which collaborated with Microsoft Research on the simulation infrastructure, highlighted the role of synthetic data in accelerating robotics development.

Deepu Talla, vice president of robotics and edge AI at Nvidia, says: “Training foundation models that can reason and act requires overcoming the scarcity of diverse, real-world data.

"By leveraging Nvidia Isaac Sim on Azure to generate physically accurate, high-fidelity synthetic datasets, Microsoft Research is accelerating the development of versatile models like Rho-alpha that can master complex manipulation tasks."

Microsoft has opened signups for the Rho-alpha Research Early Access Program and said further updates on its robotics research efforts are expected in the coming months.