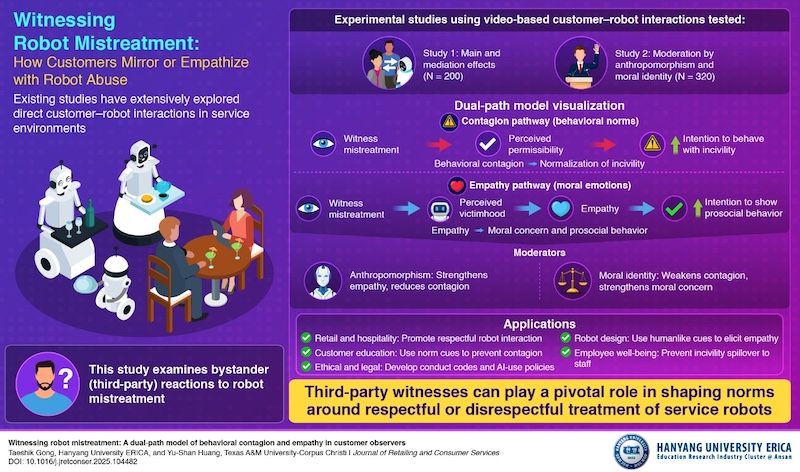

A new study from Hanyang University ERICA, in South Korea, has found that when people witness someone abusing a service robot, their response follows one of two psychological paths: some copy the aggressor’s behavior, while others feel empathy for the robot and act more kindly.

The research, led by Professor Taeshik Gong, offers one of the first empirical looks at a phenomenon that many people have noticed but few have studied – the discomfort that arises when robots are kicked, shoved, or insulted in public or in online videos.

Such scenes, once intended to showcase a robot’s balance or durability, increasingly strike observers as needlessly cruel.

Gong’s team found that bystanders’ reactions depend largely on two factors: how humanlike the robot appears, and the observer’s own moral identity.

When a robot is designed with expressive eyes, an emotive voice, or gestures that suggest personality, people are more likely to empathize and less likely to imitate mistreatment.

Conversely, when robots appear purely mechanical, some observers may mirror the aggressor’s hostility – a form of behavioral contagion that normalizes incivility.

The findings have practical implications for the service sector. “An application of our research is in hotels, restaurants, airports, and retail stores, where robots increasingly serve customers,” explains Prof. Gong.

“Managers can design training protocols for employees and visible norm cues such as signage, scripted responses, and pre-recorded reminders to discourage customer mistreatment of robots. This helps prevent a contagion of incivility among bystanders and promotes a respectful service climate.”

Prof. Gong adds: “Even though the study focuses on customers, the findings matter for employees too. If the mistreatment of robots is normalized, this may spill over into how customers treat human staff.

“Conversely, reinforcing prosocial norms toward robots can strengthen the overall moral climate of the service environment, benefiting employees’ dignity and customer satisfaction simultaneously. Within a decade, we may see relevant codes of conduct, workplace guidelines, or even legal provisions.”

The study suggests that robot designers and service managers can play a crucial role in shaping public norms. Humanlike design cues not only make robots more approachable – they also act as moral signals, reminding people that even nonhuman entities deserve a measure of respect.

Published in the Journal of Retailing and Consumer Services, the research underscores a growing ethical debate in robotics and AI: as intelligent machines become part of daily life, how society treats them may reflect – and influence – how we treat one another.