Billion dollar brain: Exclusive interview with Professor Alois Knoll

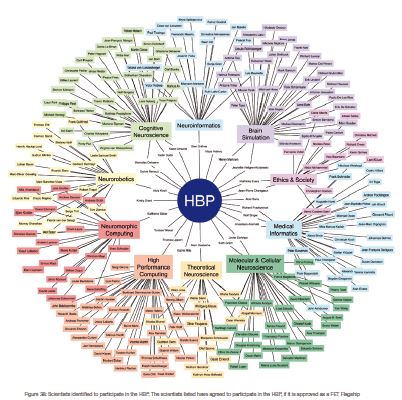

Professor Alois Knoll, co-ordinator of the European Clearing House for Open Robotics Development (Echord), and one of the key scientists involved in the $1.5-billion Human Brain Project, speaks exclusively to Robotics and Automation News

It’s not every day you learn a new word you like. For someone who has been in journalism longer than I’d like to recall, it’s an interesting experience to be reminded of an extract from a biography of Dr Samuel Johnson, “father of the English dictionary”, written by James Boswell in 1791, which I read in my teens.

Nothing specific from what I read applies here, but I’ll paraphrase a quote from Johnson which I think may be most appropriate. “A writer only begins an article. A reader finishes it.”

It’s not exactly what Johnson said, but then how do I know he even said it? Anyway, I’ve changed the word “book” to “article”. I remember doing something similar in my teens, for my English exam: I took the first few words, and then added my own. I was reading an abridged version of Boswell’s book in Reader’s Digest magazine, so it may not have used exactly the same words as the original.

I learned a lot from Reader’s Digest. Can’t really say what, because I don’t know specifically. But I think I learned something about writing. Mainly that some people enjoy reading. Maybe. Or maybe I plagiarised an entire article almost word for word for an English exam.

No man was ever great by imitation

I’ve always been interested in words, being born in Bangladesh, a country born out of a war to preserve the people’s language, arriving in England in 1972 as an immigrant, and having to learn a new language. I still have this sense that English is a language that comes from my brain because I acquired it consciously, whereas Bangla was the language I heard from before I was born and then during the first few years when I was still in Bangladesh, and as such, when I speak it even now, I feel like it comes from a deeper place. Perhaps it’s the difference between acquiring information and having knowledge.

Having said that, I can only speak Bangla; my written Bangla is of primary-school standard, the level I reached during my brief schooling in Bangladesh. Most of my life has been spent in England, where I have gradually extended my vocabulary over the years, never really tiring of learning new words.

And so it was that recently I learned a new word I liked the sound of. It might not be something that appeals to a lot of other people, but it’s “mechatronics”. I thought I’d heard the word before, although I wasn’t sure. But I’d never heard it in a more apt conversation or context.

The true measure of a man is how he treats someone who can do him absolutely no good

I was interviewing Professor Alois Knoll, arguably one of the most influential roboticists in Europe. He was speaking about a wide range of subjects to do with robotics, and in the middle of a sentence he said “mechatronics” and I’ve since bought a domain name as a direct result. MechatronicsNews.com. It might not even get off the ground, but I’m glad I own it.

Professor Knoll is currently co-ordinator of the European Clearing House for Open Robotics Development (Echord), which he conceptualised and brought into being through his discussions with the European Commission starting in 2007.

His argument to the Commission at the time was that European robotics research needed a “unique, new approach, one which involved major interaction between academia and industry”.

At various points in our conversation, his voice seemed to trail off when he compared Europe to the US. My sense was that he laments what he sees as Europe’s relative lack of enthusiasm for robotics, and notes that the US is investing heavily in the sector. I wasn’t sure that’s what he meant, but that’s what I thought. But then, my sensor readings have always been more than slightly off.

The reality, as I see it, is that European robotics is in reasonably good shape. Germany is one of the leading robotics-oriented nations on Earth. The International Federation of Robotics places it third, after South Korea and Japan, on a list of countries with the highest number of industrial robots per 10,000 people employed in manufacturing, a measure known as robot density.

Italy, Sweden, Denmark, Spain, and Finland are also in the top 10, which basically makes it a slightly Europe-dominated list. So, as I say, my sensors probably need fixing or replacing, and perhaps Professor Knoll doesn’t think the Americans are different or ahead of the Europeans.

In terms of money invested, maybe the US is more committed, but Europe does have tremendous intellectual resources, which may make up for the investment gap.

What is easy is seldom excellent

Professor Knoll’s own research and development project, Echord, is funded by the European Union and based in Germany, and connected to the Technische Universität München, or Technical University of Munich. Echord++ is a separate follow-up project with a much wider approach. Knoll is co-ordinator of both Echord and Echord++.

When I was arranging the appointment to speak to Knoll, I suggested times when I would be less busy, and mentioned one particular week when I would be almost unavailable and would prefer not to conduct the interview. But I knew Knoll was a busy man, so when it turned out that he would only be available during that very week, when I would have to make the phone call from a noisy place, I rearranged my schedule.

Not only is Professor Knoll the co-ordinator of Echord, he is also one of an elite group of scientists who are at the centre of the Human Brain Project, the billion-dollar, decade-long research endeavour established in 2013 with funding from the EU.

Having been in the field most of his life, Professor Knoll is widely known in European robotics. Indeed he has been instrumental in building some of the fundamental components of the intellectual infrastructure of European robotics of today.

I started by asking him about his current areas of interest and research.

Professor Knoll: “We’ve been researching many areas, but there’s one invariant, which is human-robot interaction, multimodality and closed loop, or closing the loop between the robot environment and human behaviour. Human-robot interaction is the basic theme that I always come back to.

“I was the first chair of the international conference on intelligent systems and robotics [First IEEE/RAS Conference on Humanoid Robots]. As part of my role as chair, I oversaw the counting of the keywords in the scientific papers on the subject. This is done on a regular basis by every programme chair.

“And the most interesting, most frequently mentioned, most attractive topic at the moment seems to be human-robot interaction, and it’s on the sensors side.

“It’s interesting to see the contrast between sensor research, which is of course very challenging, and mechatronics.”

No man can taste the fruits of autumn while he is delighting his scent with the flowers of spring

Knoll – the “K” is pronounced, not silent – says scientists seem more attracted to undertaking sensor research than attempting to overcome the challenges posed by mechatronics. He would like to see more of a balance.

“Well, there are quite a few problems in mechatronics. Think, for example, of five-finger, four-finger and three-finger hands, which are largely unsolved. I mean, there are hands available, and they are very nice, but they are expensive, and they’re certainly not for mass use.

“People, like myself, seem to be a lot more interested in sensors, artificial intelligence, data processing, sensor information fusion, and things like that.

“So, for the advancement of the whole field, I think it would be beneficial if more people – unlike myself – were more interested in mechatronics, and control of bodies and things like that.”

He adds that this is only his hope, but he does seem to be using his words to encourage people towards mechatronics.

“I would like to see that. I’ve been in sensors for all my career. I mean, I can only say that. I will certainly not go into designing robot bodies now, although we have done some work in that field, and I think quite interesting work. So that’s at the moment more like hype, if you will. But that’s probably because the development of the hardware in terms of the mechanics is rather slow. Whereas development in terms of cameras, cheap sensors, and things you can reuse from smartphones – very cheap but very high quality – is something that fascinates people.

“And the same goes for AI, where we had a number of phases, starting from this euphoria in the 1980s with expert systems going through the AI winter, and now deep learning. But that’s only one aspect. This period sees another revival, and I think for good reason. The results that we see now are quite impressive, something we could not have seen 10 years ago.

“I think that’s a very interesting development, and being in robotics is certainly a very interesting place, as a researcher but also increasingly as a businessman, although it has to be clear that it’s hard work, it’s difficult, and this is not like the internet economy, so the growth rates will remain moderate but stable over the years. And that’s maybe better than being in a field of the economy where you have growth rates of 50 or 100 per cent only for a couple of years and then it’s over.”

Self-confidence is the first requisite to great undertakings

Knoll’s reluctance to engage in hyperbole robs me of many bombastic sentences so beloved of downmarket muckrakers like myself. Any hope I had of a formulaic write-up went out the window. Besides, I’ve been observing the robotics sector for about a year now, albeit as a non-expert. But from my journalistic view, however limited, this past year has seen an acceleration in developments in many fields related to robotics, certainly if you judge the sector by how many news stories about it have recently been appearing in the media. So I ask Knoll: does he not agree that robotics is currently growing, and that the growth is accelerating?

“We will be in continuous growth,” says Knoll. “The growth rates may even increase. But there will be no sharp drop, like, let’s say, the internet [bubble], where you have rapid development with growth rates of 100 per cent a year but then there are only one or two companies left that end up dominating. This is not what robotics will be like. There will be a large number of vendors with very specialised solutions. They will excel more and more because there’s more and more need. This will be a positive feedback cycle. But don’t expect growth rates of 50 or a hundred per cent in robotics – that’s just not doable.

“It’s certainly true that robotics has been growing at a more accelerated rate now than it has been in recent years. But I’m a bit cautious to raise expectations that this is the next big thing and that the next Google is just around the corner in robotics. I don’t think that’s going to happen, simply because this is very complex, it’s a combination of software and hardware, you need to master a lot of fields to be successful in this area, and there’s not too many people who can actually do that. So that will limit the growth.

“But of course the need – the demand – is obvious. For example, the assistance systems we have for autonomous cars, which is also a specialised type of robot. So the potential of the sector is huge, but it’s not an easy sector to navigate.”

Integrity without knowledge is weak and useless, and knowledge without integrity is dangerous and dreadful

I accept his point that growth will be steady, and maybe there has been a recent spike in media interest, particularly around the greater amounts of money being invested in areas such as artificial intelligence, machine learning, deep learning, robots that can cook and so on. But surely there must be some trends in robotics that will lead to dramatic growth in some sectors, or at least be more rewarding than others, from a research and development perspective as well as from a commercial point of view?

Knoll says: “In principle, I see one trend. There won’t be a ‘Big Bang’ after which there will be a universal robot that everybody can use for every purpose. It’s very unlikely that we will see a humanoid robot that everybody can use like a slave as in the Middle Ages.

“What is likely is that the devices and appliances we have at home and in factories will become more and more intelligent. So they will be equipped with more sensors, they will be equipped with more computing power, and people will learn how to use and how to programme them.

“Here of course is one of the decisive factors: the interface between the human and the robot. That’s one thing that’s important. But there’s also another interface, and that is the interface between the system on the robot and the environment – that will also be very important to master.

“Making a robot navigate within a room is something that we have learned how to do, but if the room is difficult to describe or if it’s a completely new building, or something like that, then it’s still quite difficult. So equipping the robot with basic skills is not trivial. But nevertheless, this will happen over time. Over the next couple of years we will certainly see a couple of interesting developments.”

Perhaps I let myself down here in that I should have pressed him on what those developments might be. But I had so many questions to ask him that I got muddled into asking him about the most sensationalist ones, maybe to try again to encourage him to say something grandiose. I mentioned deep learning, of the type demonstrated by Google’s DeepMind, which reportedly beat an expert human grandmaster at the ancient Chinese game of Go, a feat previously thought to be beyond the capabilities of computers. Is deep learning, or machine learning, not the answer to the problem of robots not knowing how to navigate unfamiliar surroundings?

Knoll says: “You have to make a distinction between a bodied intelligence and a disembodied intelligence. A disembodied intelligence is a computer sitting somewhere. You put in a big chunk of data, the computer adapts to it by machine learning, and then outputs another chunk of data, which is presented to you.

“But here we are talking about a computer or an artificial intelligence built into a body, which is a totally different ball game because what you expect from a bodied intelligence is that it reacts to changes in the environment, it reacts to users’ instructions, and it can develop some independence in moving around, in doing something, in assembling something, in being in an assistance role in a hospital, and so on – and that is much, much more difficult.

“A machine or helper that can only help you play Go is probably not something on which you’d spend much money.”

The future is purchased by the present

I wasn’t sure I understood the problem the professor was describing. My brain started fumbling around for words to explain what I understood and what I wanted to ask. I thought neural networks and all sorts of massively parallel computing platforms, the cloud, or whatever, had theoretically solved the problem of processing large amounts of data. Can that data processing power not help the robot navigate any surroundings, no matter how unfamiliar?

“There has to be a piece of hardware, a robot if you will, that can use a big computer, whether it’s built into it or if it’s connected by WiFi – that doesn’t really matter. The important point is that we have a body, a piece of hardware, with wheels or legs or arms, that perceives its environment and has to respond in realtime to changes in this environment.”

So what you’re saying is that it’s a problem with the mechanics?

“It’s a problem of the realtime capabilities of these algorithms, which are not normally there. For example, when you play Go, the computer takes half a minute or a minute to make a move.”

I thought it was realtime. Or at least pretty fast.

“No. Typically, when you do things at big data centres, you do it in batch mode. And of course you could argue that Google is more or less realtime. But when it’s a very complicated structure, when you try to solve really complex problems with these computers, they can take hours and days to solve, and this is not realtime.

“So, realtime is one thing, but reacting in a sensible way to changes in the environment is another thing, and that is by no means solved to a satisfactory degree.”

I suppose the amount of data requiring processing depends on how sophisticated the machine has to be. If the benchmark is human-like responsiveness, then even I know that this will require a gargantuan amount of data processing, as well as very high levels of mechanical manoeuvrability. I started fumbling again. I was at once trying to understand the problem the professor was describing, and trying to encapsulate what I thought I may have understood into a line of inquiry. But I got a bit stuck and it made the professor laugh. I knew at some point I would start revealing the limitations of my knowledge, and this was that moment. I covered up by waffling on about how it’s a question on a lot of people’s minds: “How long before the machines are as responsive to their surroundings and as capable of navigating their environments as humans?” I felt I nailed it. I finally uttered some words in a logical order, forming a recognisable question.

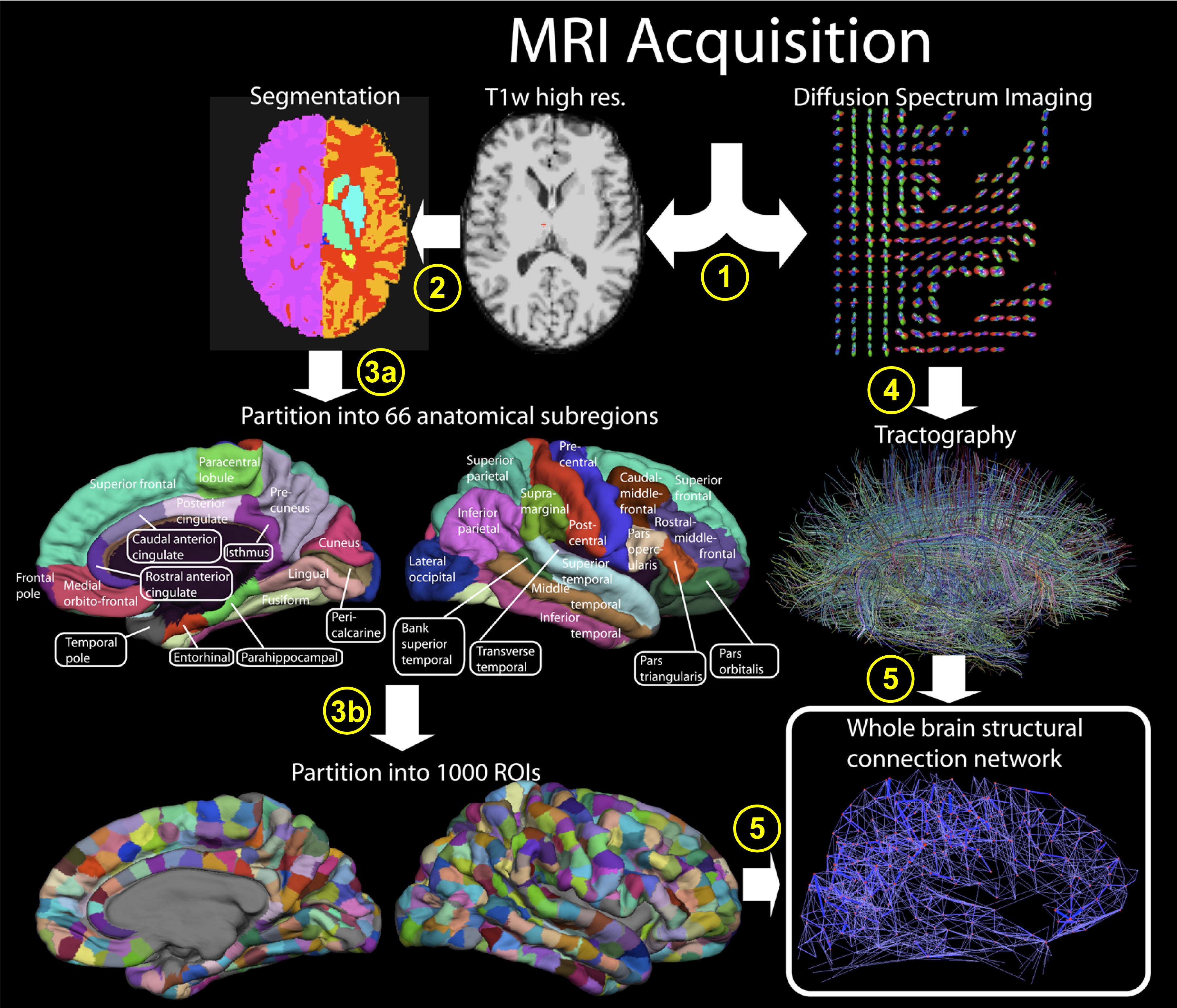

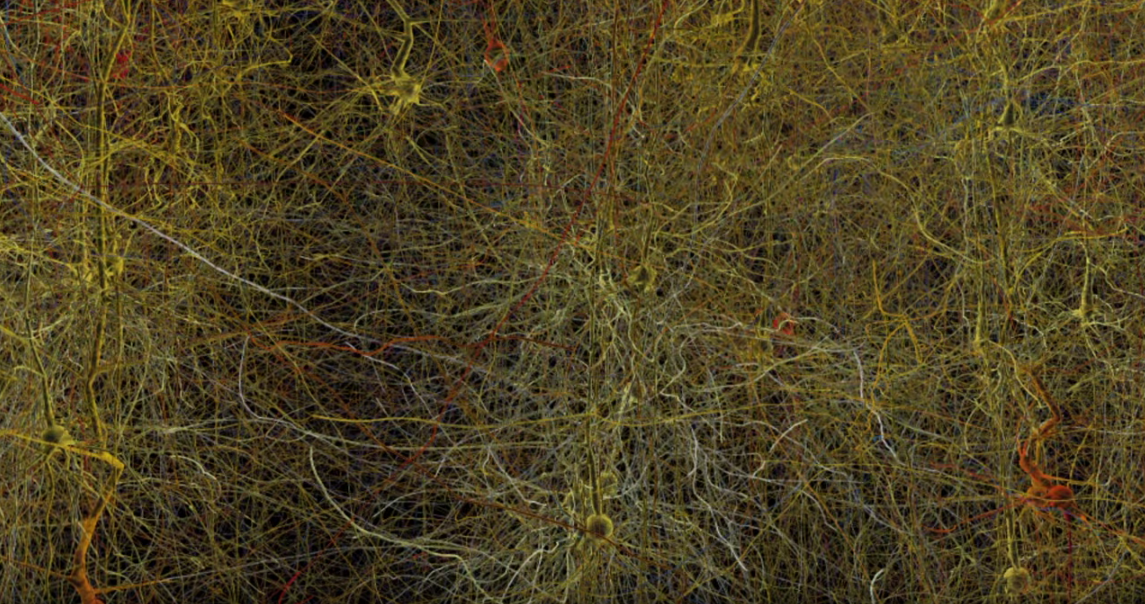

“Let me put it this way, there is a group in Manchester, UK, run by Steve Furber, who invented the ARM processor 30 years ago, so he’s one of the top computer architects. As part of the Human Brain Project, he is now building a neuromorphic computing platform, where he connects a million of these chips simulating a very large number of neurons plus an even bigger number of synapses, just like in the human brain. It’s basically a neural network like we have in our brain.

“And he says that this machine with 1 million cores when it’s finished, I think by the end of the year or early next year, will have the intellectual capability of 1 per cent of one human brain. I mean it’s a big machine, about the size of five bookshelves.”

Almost all absurdity of conduct arises from the imitation of those whom we cannot resemble

Building on what I thought was a successfully structured question in difficult circumstances, or realising that my limitations had been found out, I decided to go space cadet. I asked how much of the human brain we have understood. Even I know that’s not a sensible question because no one knows what the intellectual limit of the human brain is, much less that of the human mind. But having already embarrassed myself, I had nothing to lose. I even asked the professor to put the sum of all human knowledge of the human brain in percentage terms. He may or may not have been humouring me, but he answered anyway.

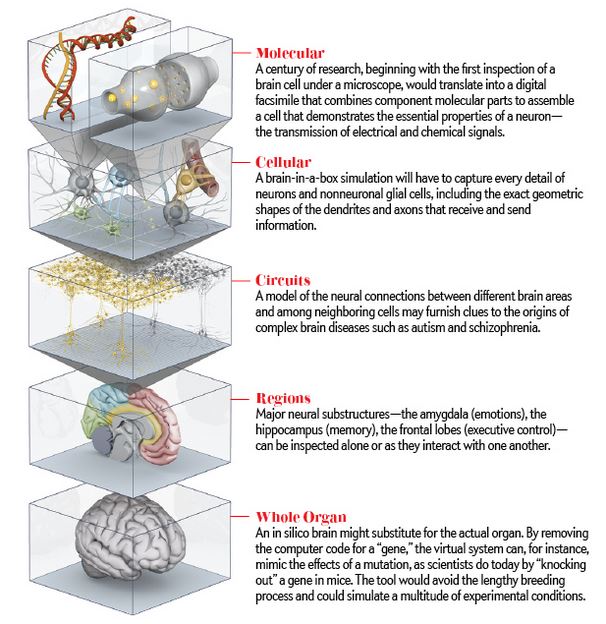

“Your earlier question was how much have we achieved, or how far have we gone down that particular road? I would say maybe just 1 per cent in terms of really implementing a technical system.

“When you ask me how much have we understood about the brain, that’s a very difficult question, which nobody can answer even now because you really have to differentiate between the individual layers, from the molecular level to the topology. This is an open-ended story and it’s difficult to say when it will end.

“What we can say is that we have understood enough to be able to map some of what we know about the brain to the technical systems we build, to hardware, to computer architecture, and also to algorithms that are of a new quality, and let us hope we will achieve some…”

He paused for a moment, and then said: “As you may be able to tell, I’m a bit reluctant to use the word ‘intelligence’ because the connotation is always that it’s human-like intelligence. But let’s say we can map our knowledge of the human brain to smarter machines, smarter devices, smarter appliances that sooner or later we will be able to buy.”

The next best thing to knowing something is knowing where to find it

The word “intelligence” is often used these days to mean “knowledge” or even “information”. Or, as Google search engine’s dictionary puts it, “the ability to acquire and apply knowledge and skills”. However, that’s not the way I understand it. The word “intelligence”, I read many years ago in Newsweek magazine, comes from the Latin word “intelligo”, which means something like “to choose between different options”.

I do accept, as it says on the Simple English Wikipedia, that there is no general agreement on what intelligence is, but I don’t see the point of using the word “intelligence” interchangeably with “knowledge”, “information”, or even “the ability to learn” – for the last one, I’d maybe use the word “aptitude”.

It’s demeaning to the word “intelligence” to dispossess it of its meaning, its distinction. Using it to mean the same thing as “information”, and those other things, robs us all of a special word, a word which unlocks the one truly human quality that roboticists like Professor Knoll are trying to emulate in their machines.

If I were to mutate the meaning of “intelligence”, I would probably go the other way, and elevate it to mean more than simply choosing between options. I would argue that it’s the ability to recognise that there are options in the first place. I’d take it closer to wisdom, or perhaps revelation.

Whatever your view, I think I understood Knoll’s reluctance to use the word intelligence in the context of a computer or a robot, but my view is that robots and computer programs, AI-based or otherwise, do display a rudimentary form of intelligence. As they acquire more data through machine learning and deep learning and so on, they will make more informed choices and, therefore, become more intelligent. But such intelligence – no matter how much information it has to work with – is currently primitive at best, at least in the systems we know about.

Curiosity is, in great and generous minds, the first passion and the last

Having indulged myself in my own opinions, I will report what Professor Knoll said when I asked him to explain the distinction he was making between human intelligence and machine intelligence.

“The distinction I’m making is that human intelligence will only work in a human body because it will only develop in our body as we grow. It takes years to form it, to shape it, as the individual develops and of course along with the development of the human species.

“Whereas, if you are far from having such a body, and if you are far from perceiving the environment like we do with our special sensors, human senses, it’s very unlikely that we will see a similar development.

“What we can do is this: what we know about our intelligence we can map, we can somehow design into a computer system with a robot connected to it. But that is nothing that I would call intelligence because it has not emerged from what you might call an infant state to what we see as an adult’s full intelligence.

“What we project into the robot will always remain incomplete. It’s almost impossible, I would say, that we can, as some people believe, dump our mind into the computer and then put that computer into a body. That won’t work.

“It won’t work because even if you think that you put your brain into another human body, let’s assume that would work, right, that body is so different from yours that it’s very unlikely that you would be able to use it like you use your own.

“Don’t get me wrong, I’m a very strong supporter of developing AI. If we conduct these thought experiments, then we can learn a lot about ourselves: about what we can do, why our cognitive abilities have developed, and what it would mean for a machine to develop in the same way. And given the state of the technology today, it’s impossible to say when that will be possible.

“I mean ideally you have a robot that grows and learns, and becomes like a human, but then if you have that, what would you need a robot for? We already have humans.”

He who makes a beast of himself gets rid of the pain of being a man

I don’t think the professor was setting intellectual traps for me; I usually do that to myself. But I couldn’t resist answering his rhetorical question. I suggested that maybe the reason why people want to develop humanoid robots is so that they wouldn’t have to give them equal rights and could treat them as slaves, or as machines, to use perhaps a more palatable word.

“But that’s another question, right? When you have a robot that is like a human, maybe they would be like pets, or animals, and people would demand rights for them.”

I was wary of getting into a discussion about ethics and morality, or a consideration of any possible legal issues about giving robots rights, as my knowledge of such subjects is limited at best, but the Human Brain Project has an entire department studying these very questions. So I had to ask the professor, will robots be given rights?

“Yeah, that’s exactly the point. That would be very likely. Would you then be allowed, for example, to remove their batteries?”

He paused for a moment, and I realised he was asking me for an answer. I had absolutely no idea. And anyway, I’m a journalist. I just ask questions. Technically, and actually, I have no answers. So I had to send the ball back into his court.

He laughed and asked: “How much time do we have?”

I knew this time not to answer because this was definitely a rhetorical question.

He continued: “That’s another big issue. It’s all pure speculation at the moment. But clearly if you extrapolate that, you may well come to the point where you think, ‘Well, at least this robot has the intelligence of, let’s say, a cat or a dog because it does the same things’. It could be a guide dog, like the ones blind people use to show them the way.

“So it’s very likely that these robots will achieve that level of intelligence, and then the question is really, ‘These are machines we built, they are mechanical, so can we give them the same rights? Do they have a consciousness or something like that?’ So what is the essence of a personality that deserves to have some rights?”

It was becoming clear that he was reluctant to answer the question one way or the other; instead he was just sending more rhetorical questions back in my direction. But I tried again: do you think robots should have rights?

“I don’t think so, and for one simple reason, and that is that these are still… let me put it this way… at least as long as you can make sure they do not develop a personality of their own.

“And actually we are already dealing with these ethics issues, not only in the Human Brain Project but also with these autonomous cars. There is also the question of virtual robots.

“What do you think? Do you think robots should have rights?”

This time, there was no escape for me. He asked a direct question and he obviously expected a reply. It’s not so much that I didn’t have an answer; rather, I was surprised that he was even interested in what I thought. Like I said, I haven’t got a clue. But I said what I thought anyway, which was that I don’t think robots should have rights because they’re not organic life forms that came into being as part of Earth’s natural processes, and they’re not part of the animal kingdom, for example.

Even as I said that I realised there must be so many flaws in my argument, and frankly, I don’t know what I’m talking about.

“It’s really difficult,” said Knoll. “There are many arguments I could list, and there are of course people who think about the ethics of robotics full time. But it’s still only speculation.

“However, if you say, ‘Okay, this Google car develops a life of its own because it drives around and so on’, and if you are inclined to be generous, then you could say, ‘It doesn’t have a driver, but you could assume there’s a driver inside, would that driver have rights, would that driver be responsible to someone?’, then it doesn’t really matter if there’s a driver inside or a computer. But it doesn’t really matter whether there’s a computer inside because that computer is clearly developed and programmed by a human. So is that human then responsible?

“There are many, many questions, and they’re really difficult to answer.

“Nevertheless, these questions are important because if we don’t answer them in a satisfactory way, this will be a major obstacle to the development of these cars as commercial products.”

The road to hell is paved with good intentions

Mind-bendingly weird as the idea of a car driving itself is, autonomous vehicles seemed to offer much more sensible and safer parameters for this discussion, particularly as we were entering hypothetical scenarios which may or may not become reality. And anyway, I have a sneaky suspicion that by the time society arrives at the point of having to decide on rights for artificial life forms, society will have already changed to a point where the question itself becomes almost meaningless, in that, of course robots should have rights – everyone and everything else does, even animals, so why not robots? Or some sort of thinking like that.

But while you can still vandalise a car without going to jail for grievous bodily harm, I thought I’d ask the professor a simpler question. Would he feel safe in an autonomous car?

“Yes, of course, I would feel comfortable in an autonomous car. My own views and feelings are, I have been a roboticist for many, many years now, and I would be pleased to be driven in a driverless car provided of course I can be sure the technology works. I see no reason why I should trust a driverless car less than an autonomous aeroplane.”

He was talking about the autopilot systems installed in the vast majority of planes operating today. Many airliners can also land automatically, and such automatic landings are typically used in poor visibility. However, these could be classed as pilot-assistance systems when landing, whereas when planes are flying tens of thousands of feet up, it’s well known that they are often on full autopilot.

“What is the difference? The difference is that the environment is much more complicated in the case of the car. That’s basically the only difference. But when it comes to decision-making, sensing, controlling, the actuators, there’s not much of a difference between a car and an aeroplane.”

Whatever withdraws us from the power of our senses; whatever makes the past, the distant, or the future predominate over the present, advances us in the dignity of thinking beings

So, almost closing time, and we’d brought the conversation down to more formulaic questions. The perennial favourite about the future had to be asked, of course.

“We will be concentrating on the Human Brain Project. There are people who are busy with ethics, but that is not in the focus of our research. But we are working on the basic principles of the functions of the human brain at various levels, and we are trying to use their data, and also data from our own institute, Echord, and what is available around the world, to develop brain-derived controllers for robots. And what we are doing here is that we implant this into soft bodies of which we have quite a few. Some of them are animal-like, some of them are human-like.

“And at the same time we are developing a simulation system so that we will be able to virtualise our research, which means that we can do the same experiments with robots in the real world and in the computer.

“This is a new approach, which we can only do because we have these high-performance computers now, and it would not have been possible 10 years ago. We have very good models of the physics of these robots which we calibrate with the real robots we are using so that we can really accelerate that research.

“We just model our robots in the computer. When we are happy with what we see there, we can try to translate that back into the real world. So what we are doing is a virtualised version of robotics research and robot development, and I think that will have a major impact in the field. And it’s only possible because we have this funding from the EU for this large-scale project.”

And with that ended the interview proper and it was time for me to boast about other interviews I had lined up, including one with a former colleague of Professor Knoll, Dr Reinhard Lafrenz, who was recently appointed secretary general of industry association euRobotics.

The word “knol”, I read somewhere, means a unit of knowledge. It was actually the name of a Google website, aimed at academics, which closed in 2012. As a name given to people, it has its roots in English-German history. I don’t know any more about it.

What I do know is that the first syllable of Boswell – “bos” – is very similar to the Bengali word for “understand”. The slight difference is phonetic, in that it’s pronounced büz. What this really means I don’t know. I’d probably need to talk to a professor of secret societies for that one.

(Main picture: Professor Alois Knoll, chair of real-time systems and robotics, stands between two tendon-driven robots developed as part of the EU project Eccerobot at the Technical University in Munich, Germany. Knoll coordinates the neuro-robotics division of the EU Human Brain Project. Photo: Frank Leonhardt.)