Why law, governance and trust matter as much as technology

Autonomous vehicles are often presented as a technical inevitability. Sensors improve, software advances, compute power grows, and the vehicles themselves become steadily more capable.

Yet despite decades of development and thousands of pilot programmes, autonomous vehicles remain rare on public roads.

The reason is not a lack of technology. It is a lack of permission.

Autonomous vehicles operate in shared public space. They interact with people who did not opt in, under legal systems built around human responsibility and alongside social norms developed over more than a century of driving.

As a result, regulation and public acceptance are not secondary considerations. They are the conditions that determine whether autonomous vehicles move beyond trials into everyday use.

In this sense, autonomy is not primarily an engineering challenge. It is a governance challenge.

Why regulation exists – and why autonomous vehicles strain it

Road transport has always been heavily regulated. Vehicle design, emissions, safety features, licensing, insurance, and liability are all governed by layers of law. These frameworks exist to manage shared risk, not to slow innovation.

Autonomous vehicles challenge several of the assumptions that underpin this system. Traditional regulation assumes a human driver who can be licensed, trained, blamed, fined, or prosecuted.

When control shifts to software, responsibility becomes diffuse. Is the “driver” the manufacturer, the software developer, the fleet operator, or the vehicle owner?

Regulators are wary of answering this question prematurely. Society tolerates a surprising amount of human error on public roads, but it is far less forgiving of machine error.

A single high-profile incident involving autonomous vehicles can undermine public confidence far more quickly than thousands of routine successes can build it.

This is why comparisons with consumer technology often fail. Smartphones and software platforms can be deployed at scale with minimal regulation. Transport cannot.

Aviation and rail offer better parallels: both are heavily automated, but governed by strict certification, slow iteration, and conservative change management.

Autonomous vehicles are being pulled into that same regulatory gravity.

A fragmented global regulatory landscape

There is no single global framework for autonomous vehicles. Regulation is emerging unevenly, shaped by political structures, cultural attitudes to risk, and economic priorities.

Most jurisdictions have adopted a cautious, incremental approach. Limited testing permits come first, often requiring safety drivers and detailed reporting.

Commercial deployment, if allowed at all, is constrained by geography, speed, or operating hours. Full autonomy without human supervision remains rare.

This fragmentation creates friction for developers. A system approved for use in one city or country may be illegal in another, even if the technical capability is identical. Scaling therefore becomes a regulatory challenge as much as a technical one.

At the same time, regulators are under pressure. They must balance innovation, safety, economic competitiveness, and public trust, often with incomplete data and rapidly evolving technology.

The United States: decentralised, political, cautious

The United States illustrates both the opportunity and the difficulty of autonomous vehicle regulation. Federal agencies set broad safety guidance, but states control licensing, testing permissions, and deployment rules.

Cities and municipalities often exert additional influence through zoning, labour agreements, and local politics.

This decentralisation encourages experimentation but complicates scale. Autonomous vehicle deployments in the United States tend to be city-by-city, negotiated individually, and shaped by local conditions. Political cycles matter. Labour concerns matter. Public opinion matters.

High-profile incidents have also shaped the regulatory mood. Even when autonomous vehicles perform statistically well, isolated failures receive intense scrutiny. Regulators respond by tightening requirements, demanding more data, and slowing approvals.

The result is a landscape where autonomous vehicle development continues, but widespread deployment remains constrained. The emphasis is on caution, documentation, and accountability rather than speed.

China: faster iteration under looser constraints

China presents a striking contrast. While regulation certainly exists, it has historically been more permissive in early-stage testing and deployment.

Large-scale trials have been encouraged, data collection has been extensive, and the relationship between regulators and industry has been more integrated.

This relative leniency has allowed faster iteration. Autonomous driving features have been tested across broader geographies and larger vehicle fleets. New business models have been explored more aggressively. Innovation cycles are shorter.

This does not necessarily mean Chinese vehicles are less safe. It means risk is managed differently. Testing at scale is treated as a path to improvement rather than a liability to be minimised.

The long-term implications are unclear. Looser regulation can accelerate learning, but it can also allow hidden risk to accumulate. As Chinese manufacturers expand into global markets, they will increasingly need to meet the regulatory expectations of Europe and North America.

In practice, this is already happening. Chinese carmakers building factories in Europe and the Americas are designing vehicles to meet local safety and regulatory standards. Autonomy, like emissions or crash performance, becomes part of a localisation strategy.

Regulation as a competitive advantage – or a brake?

Western governments have often led on regulation that later became global norms. Environmental controls provide a useful precedent.

The introduction of the catalytic converter, driven by emissions regulation, initially increased costs and complexity for manufacturers. Over time, it improved air quality and set standards that reshaped the global industry.

A similar pattern could emerge with autonomous vehicles. Stricter regulation may slow early deployment, but it may also produce systems that are more robust, more transparent, and more trusted internationally.

Whether this proves to be a competitive advantage remains an open question. The risk is that excessive caution delays learning and allows other markets to define the pace of innovation. The counter-risk is that premature deployment damages public trust in ways that take years to repair.

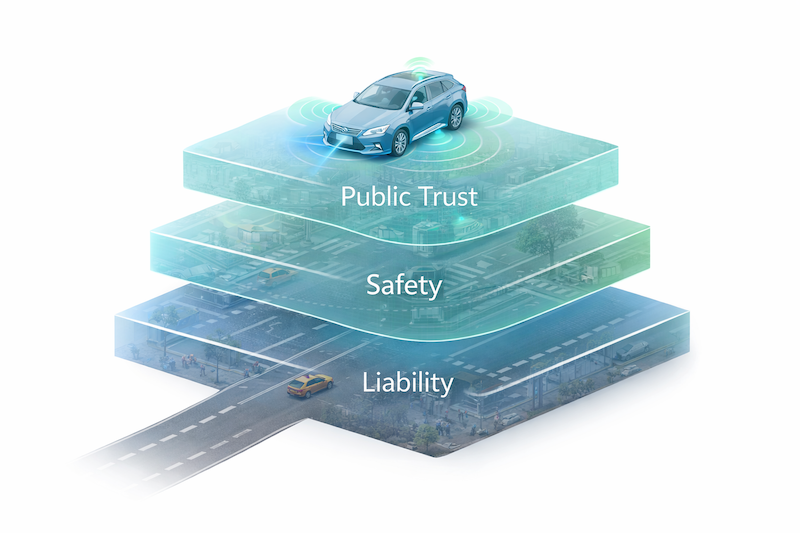

Liability: when responsibility moves from humans to systems

Liability is one of the hardest unresolved issues in autonomous vehicle deployment. When software makes driving decisions, traditional fault models break down.

Manufacturers may face product liability claims. Fleet operators may carry operational responsibility. Software suppliers may influence outcomes without direct control. Insurers, meanwhile, must price risk without long historical baselines.

This uncertainty affects behaviour. Companies design systems defensively. Regulators demand conservative safeguards. Deployment slows.

Clear liability frameworks do more than allocate blame after accidents. They shape system design, business models, and public confidence. Without clarity, every incident becomes a referendum on autonomy itself.

Public acceptance: trust is not a technical metric

Even if regulation were resolved, public acceptance would remain a challenge. Trust in autonomous vehicles is shaped less by statistics than by perception.

People tolerate human error because they understand it. Machine error feels different. It suggests loss of control. Media coverage amplifies this asymmetry, focusing on failures rather than routine operation.

There is also a psychological dimension. Roads are shared social spaces. Unlike automated systems in factories or aircraft, autonomous vehicles operate among pedestrians, cyclists, and human drivers who did not choose automation.

This helps explain why automation has been accepted more readily in aviation, rail, and industrial settings than on public streets.

Transparency and explainability as trust-building tools

One of the most effective ways to build trust is transparency. When autonomous systems are treated as opaque black boxes, suspicion grows. When limitations are acknowledged openly, trust tends to improve.

Incident reporting, clear communication of operating boundaries, and honest explanations of failure modes matter. Explainability is not just a technical challenge; it is a governance tool.

Admitting that autonomous vehicles work well only in specific conditions is often more credible than claiming universal capability.

Gradual deployment works better than bold promises

Most successful autonomous vehicle deployments share a common feature: constraint. They are geofenced, speed-limited, task-specific, and heavily monitored.

Autonomous shuttles, freight corridors, and robotaxi zones allow regulators and the public to see systems working reliably in defined contexts. Confidence builds incrementally.

The danger lies in overpromising. Claims of imminent, universal autonomy raise expectations that the technology cannot yet meet. When those expectations are disappointed, public trust erodes.

Labour, jobs, and social consent

Concerns about employment are inseparable from public acceptance. Professional drivers represent a visible workforce, and debates about autonomous vehicles often become proxy debates about inequality and economic transition.

Ignoring these concerns slows regulatory approval. Addressing them through phased adoption, retraining, and honest discussion tends to accelerate it.

Social consent matters. Autonomous vehicles will not succeed if they are perceived as benefiting technology companies at the expense of workers and communities.

What regulators actually want

Contrary to some industry narratives, regulators are not hostile to autonomy. They want predictability, evidence, and responsibility.

They want systems that behave consistently, companies that communicate clearly, and operating models that can be supervised. Demonstrations matter less than long-term reliability.

The shift underway is subtle but important: from permission to test, toward permission to operate. That transition depends on governance, not spectacle.

Trust as the final milestone

Autonomous vehicles will not arrive everywhere at once. They will appear first where regulation allows, where trust has been built, and where use cases are clear.

Technology will continue to improve, but widespread deployment will depend on law, liability, and public confidence. Regulation and acceptance are not obstacles to be overcome; they are infrastructure to be built.

Trust, ultimately, is the final milestone. When societies believe autonomous vehicles are understandable, accountable, and aligned with public interest, deployment will follow. Until then, autonomy will advance carefully, unevenly, and under supervision.