For more than a decade, Intel’s RealSense cameras have been synonymous with 3D perception – powering robots, drones, and biometric systems with the ability to sense and interpret the physical world.

Now, after spinning out from Intel, RealSense is charting its own course as an independent company focused squarely on what it calls “Physical AI”: equipping machines with vision that is not just human-like, but in many cases better than human.

At the centre of this transition is Mike Nielsen, chief marketing officer at RealSense, who is helping define the company’s identity beyond Intel’s shadow.

Nielsen and his team believe that robotics and automation are moving into an era where perception will make the difference between prototypes and scalable, commercially viable systems.

From autonomous mobile robots navigating crowded warehouses to humanoids working alongside people, machines now require real-time depth, accuracy, and reliability across unpredictable conditions – challenges that traditional cameras and sensors have struggled to meet.

In this wide-ranging conversation, Nielsen explains how independence has unlocked new momentum for RealSense, from faster product development to deeper industry partnerships.

We discuss why robots need “better than human” perception, how different depth technologies – monocular, stereo, and time-of-flight – stack up against each other, and why RealSense claims such dominant market share in both humanoids and AMRs.

He also shares details of the company’s latest depth camera innovations, its push toward higher safety certifications, and its vision for fusing sensors to reduce reliance on costly lidar.

The result is a candid look at how RealSense plans to shape the next wave of robotics, biometrics, and machine intelligence – one depth camera at a time.

Q&A with Mike Nielsen, CMO at RealSense

Robotics and Automation News: Why did RealSense spin out now, and what’s materially different about RealSense’s strategy, pace of product development, and customer focus as an independent company versus under Intel?

Mike Nielsen: RealSense was incubated at Intel, where we refined product-market fit and focused on AI-based computer vision for robotics and biometrics.

That foundation established RealSense as the trusted standard in 3D perception. Our mission remains the same – delivering world-class perception systems for Physical AI – but independence has unlocked new momentum.

Now we have the latitude to deepen industry partnerships, expand global go-to-market, and invest in roadmap priorities not strategically relevant to Intel.

With agility, focus, and capital, RealSense is scaling faster, capturing new markets, and leading the next wave of robotics and biometric innovation.

R&AN: You’ve said delivery robots need “better than human” perception to cope with messy, unstructured environments. What specific perception benchmarks (latency, range, depth accuracy, HDR, failure rate) define “better than human” for you – and how do you measure them in the field?

MN: Perception needs can vary pretty wildly for different environments, as you can imagine. A robot traveling at 6 km/h may only need 1 m precision at 30 m, but 1 cm at close range to park itself on a charging pad or a loading station.

Robotic arms may need to see within 1mm to delicately grasp small objects safely. And they all need to do this under variable lighting and environmental conditions, so parameters like exposure and gain need to be automated, particularly when a robot transitions between dark and light environments.

It’s something human eyes are actually quite bad at. Another area where the advantage of AI-based vision perception becomes very apparent is biometrics. Humans aren’t great at telling apart the faces of people they don’t know well when those faces are more than 80 percent similar.

But systems for those applications need to be 99.9+ percent accurate, because not letting in the right person is one thing, while letting in the wrong person is very much something else.

Lastly, latency. Robotics systems need to ingest extraordinary volumes of data in real time, from multiple sensors and cameras, and make real-time decisions from them.

With our recent announcement, integrating our D555 with the Nvidia Holoscan Bridge, we are reducing the “time to decision” for a robot significantly over traditional CPU and bus-based systems, because the video and depth data is piped straight to the GPU with no overhead.

R&AN: Depth with one lens vs two: for readers who wonder how monocular systems infer depth, can you compare monocular depth estimation (learning-based/structure-from-motion) with stereo (two lenses) and ToF/active IR? Where does each win/lose on accuracy, compute, power, and cost for, say, AMRs?

MN: The main benefit of monocular depth inference is that you can use cheap RGB sensors and reasonably lightweight computing. The downside, of course, is accuracy, which comes in multiple dimensions.

While you can get reasonable distance accuracy – say 85 percent – for a stationary and simple object, that falls off significantly when you change the lighting, move the object, partially occlude the image, or introduce any of the other things that happen when you operate in the physical world.

For some long-range applications where you need to determine rough estimation of distance in static environments, and even those where you are inferring motion direction, it can be fine.

But even in those applications, there’s too much variability, assuming you can live with 60-80 percent accurate measurement in the first place.

We do stereo-calculated distance using visible and IR, which gives us highly accurate measurements under variable lighting conditions and for both objects and robots in motion, down to the sub-mm level.

The main benefit of a stereo camera is that the distance computation is handled by a specialized SoC on the camera itself, so the robot’s compute can handle tasks like safety and mission completion.

This significantly lightens the load, especially when dealing with a robot equipped with multiple active sensors, as most of them today employ four to six cameras for 360-degree perception, with the overlap required to accommodate occlusions and obstructions.
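The geometry behind stereo ranging, and the reason precision requirements differ so much between close range and 30 m, can be illustrated with the standard pinhole stereo relation Z = f·B/d (depth equals focal length times baseline divided by disparity). The following is a minimal sketch under stated assumptions: the focal length, baseline, and sub-pixel disparity error are illustrative values chosen for the example, not RealSense specifications, and the function names are hypothetical.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Standard pinhole stereo relation: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error(focal_px: float, baseline_m: float, depth_m: float,
                disparity_err_px: float) -> float:
    """First-order depth uncertainty: dZ ~= Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


# Assumed, illustrative parameters (not taken from any datasheet).
FOCAL_PX = 640.0          # focal length in pixels
BASELINE_M = 0.1          # distance between the two imagers, in metres
DISPARITY_ERR_PX = 0.1    # assumed sub-pixel matching error

for z in (0.5, 2.0, 10.0):
    err_m = depth_error(FOCAL_PX, BASELINE_M, z, DISPARITY_ERR_PX)
    print(f"range {z:5.1f} m -> approx. depth error {err_m * 1000:.2f} mm")
```

Because the error term grows with the square of the distance, the same camera that resolves fractions of a millimetre up close is far coarser at long range, which is consistent with a robot needing only rough precision at a distance and fine precision when docking.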

R&AN: RealSense claims strong adoption in AMRs and humanoids. Can you quantify current market share by shipped units or active deployments, and name a few flagship customers or platforms where your cameras are standard?

MN: We continue to see extraordinary growth in the humanoid space, and some of our customers are asking us if we’re capable of scaling to millions of units per year.

Based on our market knowledge, we are deployed in over 80 percent of the humanoids produced today. We expect several key applications to go mainstream fairly quickly in various markets.

AMRs continue to grow at a high rate as factories, warehouses, and other environments are optimized for their use.

At the same time, AMRs are beginning to operate in highly dynamic environments, signaling a shift from static paths and an opportunity for even greater growth. Our internal analysis shows that we are deployed in approximately 60 percent of AMRs today.

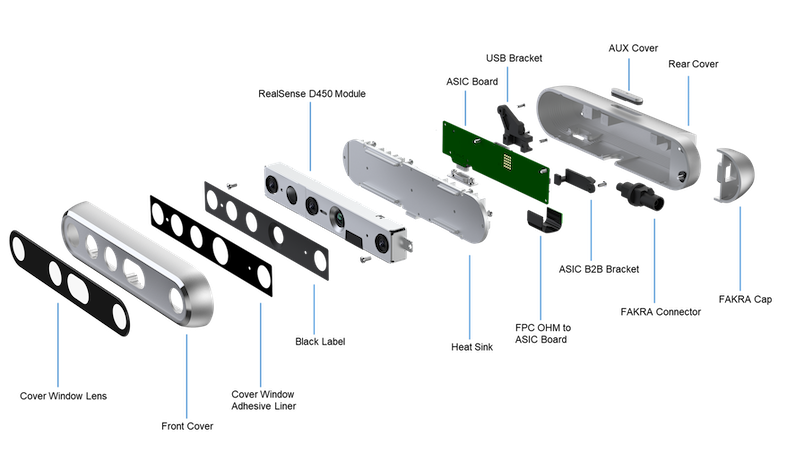

R&AN: What’s new under the hood in the latest depth cameras (for example, D555 and the Vision SoC V5)? What edge AI models run on-device today (object detection, segmentation, tracking), and what latencies are you seeing from photon to plan?

MN: The D555 has a new SoC, which we call V5, with some significant innovations that will allow us to ramp up the entire new D500 series family over the coming months.

This includes dramatically increased processing capabilities, with 5 TOPS of available compute and on-board DSPs to radically improve image quality and reduce noise, as well as other capabilities that allow us to build new cameras in a more modular way.

While we have not yet exposed the D555 processing to the developer community, the underlying infrastructure for image processing will enable multiple model types to reside on the device, further offloading the core compute from perception tasks. Stay tuned – more details coming!

R&AN: Safe navigation around humans: which safety standards and tests do you design toward (for example, ISO 3691-4, ANSI/RIA, ISO 13482)? What incident modes (glare, transparent/black objects, low light, rain/snow, occlusions) are still hardest, and how are you mitigating them?

MN: As you are aware, some of the most challenging conditions to manage include highly variable lighting, harsh reflections, and transparent objects.

While we have already achieved SIL-1, our customers are pushing us towards advanced reliability, and we are working eagerly towards SIL-2 certification, guided by IEC 61508.

R&AN: Sensor fusion: where do you stand on stereo + IMU + lidar integration for delivery robots? Do you see stereo replacing low-cost lidars, or is the winning stack a hybrid, and why?

MN: You rightfully point out that the sensor stack needs to be fused, because no single traditional method is adequate to accomplish all of the tasks currently required. Additionally, the market is conditioned to accept that you need those three sensor types, but we see that changing.

IMU integration was a first step, as it significantly reduces cost and complexity. Lidar remains a sticking point, but there are limitations in how it is used today – primarily in 2D, so you can see a plane of objects, but without the intelligence of what lies above or below.

This is immensely helpful when you are in a static environment, but to move to more dynamic situations where there needs to be perception in 3D, your tech stack starts to look different.

Should I invest in migrating 2D lidar-based safety systems to 3D lidar, and can I justify the cost of a 3D lidar in my system? Or can I just use my stereo cameras for the same thing?

Our customers have been pretty clear with us that they would like to leverage their investment in stereo vision into the space that lidar currently resides.

The cost alone is a major driver, with a 10x difference between a 3D lidar system and a stereo camera. But it’s also the development investment; you’ve already got stereo vision for general perception on the robot in the first place, why not use it?

R&AN: Developer experience and TCO: how do you price and support SDKs, calibration, and long-term maintenance? What is the typical time for a robotics team to go from “hello world” to reliable obstacle avoidance using your stack?

MN: Our SDK is free and open source. We believe it’s one of our key differentiators, as it allows our developer partners to get up to speed quickly with proven code and applications. In simple cases, we’ve seen robots up and running in a matter of hours.

Of course, that’s for a two-wheel robot doing simple navigation and avoidance tasks. But even more complex implementations, such as multi-sensor, dynamic navigation, 6DoF, and humanoid systems, leverage the same common framework in SDK 2.0 across our entire family of cameras.

This simplifies platform choice, allowing customers to optimize for each capability – min-Z, FoV, filter requirements, and so on. We have seen new systems from customers come online to production quality in as little as six months.
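A “hello world” obstacle check of the kind described here is conceptually small. The sketch below is plain Python over a depth image supplied as rows of distances in metres; it does not call the RealSense SDK, and the function names, region of interest, and 0.5 m stop distance are hypothetical choices made for illustration. It also assumes that a depth value of 0 means “no data” for that pixel.

```python
from typing import Optional, Sequence


def nearest_obstacle_m(
    depth_m: Sequence[Sequence[float]],
    row_range: range,
    col_range: range,
) -> Optional[float]:
    """Smallest valid depth (metres) inside the region of interest.

    Assumption: a value of 0 means "no depth data" and is skipped.
    """
    valid = [
        depth_m[r][c]
        for r in row_range
        for c in col_range
        if depth_m[r][c] > 0
    ]
    return min(valid) if valid else None


def should_stop(nearest_m: Optional[float], stop_distance_m: float = 0.5) -> bool:
    """Stop if an obstacle is inside the threshold, or if nothing valid was measured."""
    return nearest_m is None or nearest_m < stop_distance_m


# Tiny synthetic depth frame (metres); the 0.0 entries stand for missing depth.
frame = [
    [2.0, 2.1, 0.0, 2.2],
    [1.9, 0.4, 0.0, 2.0],
    [1.8, 1.7, 1.6, 1.9],
]
nearest = nearest_obstacle_m(frame, range(0, 3), range(1, 3))
print(nearest, should_stop(nearest))
```

Treating “no valid depth” as a reason to stop is a conservative design choice for the sketch; a production system would combine this with the filtering and safety logic appropriate to the platform.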

R&AN: Roadmap: what coming capabilities (global shutter across the line, higher dynamic range, event-based sensing, longer baseline options, on-camera SLAM, PoE variants) should robotics teams plan around over the next 12 to 24 months?

MN: Can I say all of the above? We’re examining several significant capabilities, including modular connectivity, on-device algorithm processing, pre-optimized SLAM, image processing, and a range of other features.

Our new SoC, and the independence of being a standalone company, allow us to accelerate delivery of new products in the coming quarters – it’s very exciting to see what’s on the horizon for us.

R&AN: Beyond delivery: RealSense also talks about biometrics and access control. How do you reconcile performance goals with privacy, spoof-resistance, and on-device processing, especially for customers operating under EU/US regulations?

MN: We spend a lot of time helping customers understand that the regulations, particularly in Europe, are our friends. They can actually help accelerate adoption.

The short answer is that our biometrics platform is privacy-first. What we mean by that is simple. We have no knowledge of any single person, nor would we store that data even if it were given to us; our camera can’t recognize your face unless you opt in first; your template belongs to you.

All biometric processing is handled on the device, and there is no PII associated with it. The image is destroyed after analysis. What you’re left with is a 500-byte hash, or template, which is a numerical representation of your face generated by our proprietary algorithm.

Spoof prevention is extremely hard to accomplish with 2D RGB and in real time. We had to employ several techniques to ensure 2D, 3D, and electronic spoof mitigation, which resulted in a one-in-a-million spoof rate.

To do this in real-time, without compromising privacy, while keeping costs low is not a trivial technology problem to solve.

One of our close partners, Ones Technology, deploys our biometric cameras in a 1:1 template matching mode. This starts by generating a template, either from a live scan of a person’s face or from a reasonable-quality image such as a JPEG.

This template can be stored in a QR code (which they can print and carry), deployed to a smart access card, such as Legic or HID, or sent to their mobile wallet. When their ID needs to be verified at a terminal, they show their QR code or present their credential card.

The terminal device reads the template, scans the person’s face, then matches their live face template scan to what is stored on the card or QR. No data ever leaves the device, no images are stored or processed, no PII is exchanged.

It’s a 1:1 match. And it’s largely foolproof. It’s an incredibly elegant way of maintaining privacy while ensuring identity verification.
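The 1:1 flow described above (read the stored template from the credential, generate a live template, compare the two on the device) can be sketched as follows. This is an illustration only: the actual template format and matching algorithm are proprietary and not described in the interview. The sketch assumes, hypothetically, that a 500-byte template is 125 little-endian 32-bit floats, that similarity is measured with cosine similarity, and that 0.9 is the acceptance threshold; all names are invented for the example.

```python
import math
import struct

TEMPLATE_FLOATS = 125   # assumed layout: 125 x 4-byte floats = 500 bytes
MATCH_THRESHOLD = 0.9   # hypothetical acceptance threshold


def pack_template(values):
    """Serialize a template to bytes, e.g. for a QR code or access card."""
    if len(values) != TEMPLATE_FLOATS:
        raise ValueError("unexpected template length")
    return struct.pack(f"<{TEMPLATE_FLOATS}f", *values)


def unpack_template(blob):
    """Read a template back from the credential."""
    return list(struct.unpack(f"<{TEMPLATE_FLOATS}f", blob))


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def verify_one_to_one(stored_blob, live_values, threshold=MATCH_THRESHOLD):
    """Compare the credential's template with the live scan, entirely locally."""
    return cosine_similarity(unpack_template(stored_blob), live_values) >= threshold


# Synthetic stand-ins for real templates.
enrolled = [math.sin(i) for i in range(TEMPLATE_FLOATS)]
credential = pack_template(enrolled)                     # what the card or QR code carries
live_same = [v + 0.01 for v in enrolled]                 # same person, slight variation
impostor = [math.cos(3 * i) for i in range(TEMPLATE_FLOATS)]

print(len(credential))
print(verify_one_to_one(credential, live_same))
print(verify_one_to_one(credential, impostor))
```

Only the two templates are ever compared, so the terminal needs neither a database of enrolled users nor a stored image, which is the property the 1:1 mode relies on.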