Agentic science. At a glance, the term might sound very complex, but the goal is straightforward: use artificial intelligence (AI) “agents” to advance scientific research more easily. And while these agents aren’t top secret, they are highly skilled and trained.

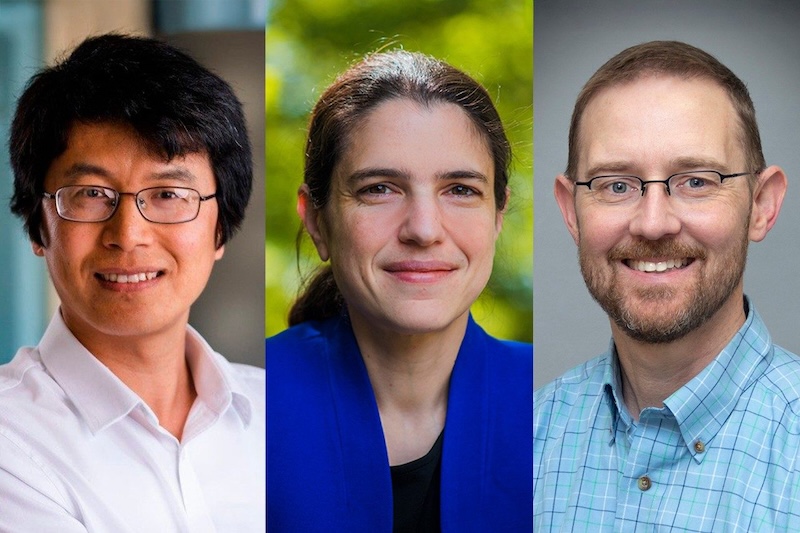

Hongliang Xin, a chemical engineering professor at Virginia Tech, along with collaborators John Kitchin from Carnegie Mellon University and Heather Kulik from the Massachusetts Institute of Technology, published a commentary in Nature Machine Intelligence exploring the foundations and frontiers of agentic science. The commentary outlines its emerging uses, its limitations, and pathways for responsibly integrating it into scientific practice.

In a recent conversation, Xin, Kitchin, and Kulik discussed the emerging paradigm of “agentic science” and what it means for the future of research.

What is agentic science and how is it different from other forms of science?

Xin: Agentic science is a new paradigm of scientific discovery in which AI takes a central role in reasoning, planning, and taking actions in scientific environments, both digital and physical. You can think of the digital portion as supercomputing infrastructure and the physical portion as a laboratory, like those at a university.

Agentic science is like an orchestra. Today, a human scientist is like a single musician playing one instrument. In the future, AI agents will act like entire sections of musicians, each adding their own part.

Together, human scientists and AI agents can create richer melodies and harmonies than either could alone. In this vision, the human scientist also serves as the conductor, guiding the orchestra and ensuring all the players, human and AI, stay in sync.

Where do you see agentic science having the biggest impact?

Kulik: Agentic science has the potential to enable scientists to develop and test hypotheses we had not previously considered. It is also well positioned to automate tasks that were previously tedious time sinks in the lab, transforming the speed at which we can innovate in a range of areas.

What excites you most about this research and the future of science using AI agents?

Kitchin: We can do things today with AI agents that were not possible even a year ago. Today we have proof-of-concept agents that can search the internet, scientific literature, and local files to gather information that can then be used by a different agent to plan a set of experiments.

Kulik: Because of the speed and efficiency of AI agents, we will have the chance to innovate in areas where traditional trial-and-error experimentation has failed to identify solutions.

Environmental stewardship and health are two areas that could greatly benefit from agentic science. But along the way we will also learn more about the discovery process as we interact with and leverage these models.

Why is now the right moment for AI agents and autonomous labs?

Kulik: Advances in language models, together with new approaches for training models with many billions of parameters on very large datasets, have recently made agentic science possible. Autonomous labs go hand in hand with these developments.

Xin: Another reason is the explosion of information. There are so many scientific publications that scientists are overloaded. They don’t have time to digest all the developments and apply them to their current work.

In the last two or three years, AI, particularly large language models, has become very good at digesting information. These models can understand context in ways no human scientist can.

A third reason is the growing emphasis on cloud labs and robotics. Automation has become essential to accelerating experiments, and AI agents serve almost as a “brain” that makes decisions for the lab. To develop a self-driving lab that can make reliable discoveries, you need a powerful brain. That’s where AI agents come in.

What safeguards are being built to make sure AI systems are safe and trustworthy in the lab?

Kitchin: I think having a “person in the loop” is the most important way to make sure AI is safe and trustworthy. It is the same way we make sure people are safe and trustworthy in the lab.

It is not generally possible to foresee all the ways something could go wrong, or how something harmful might also be beneficial (and vice versa). People should always be responsible for what they do in the lab, and I don’t think AI changes this fundamental responsibility.

Why is collaboration with other research institutions important when it comes to topics like this?

Kitchin: There are so many dimensions to AI agents, and so many problems they can be applied to, that no single group can cover the breadth of opportunities. It is important to talk with other researchers to learn from their experiences and the problems they seek to solve.

AI agents are not meant to replace these interactions; instead, they should free up more time for them. If everyone is developing their own agents and not learning how to use the work of others, we will probably have missed the point.