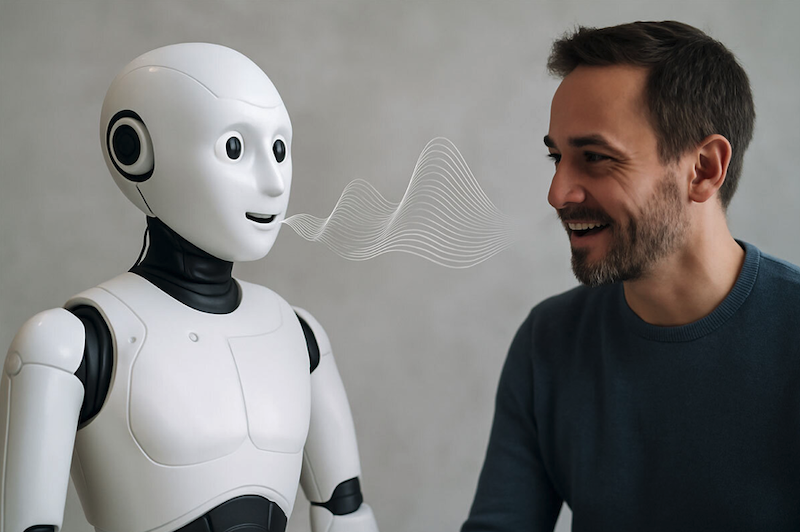

Today’s robots aren’t limited to the factory floor – they’re guides at airports, assistants in hospitals, and companions in homes.

Engineers have refined robots’ mechanics and software, yet one thing still keeps them from truly seamless human interaction: voice. Not merely the capacity to speak, but the capacity to sound natural.

We are moving into a new era where computer-generated voices must reflect true human expression – warmth, hesitation, excitement, and empathy.

It is no longer acceptable for robots to have the flat, answering-machine voices of the ’90s. A voice that communicates like a human being is quickly becoming a necessity, not an option.

This is where an AI text-to-speech (TTS) API comes into play: it lets developers give robots realistic speech that sounds natural, emotional, and human.

Natural Speech Makes Robots Feel More Emotionally Aware

We are linked to one another by voice: its intonation, cadence, silence, and stress. These aren’t mere audio mannerisms; they are how we interpret mood, intention, and feeling. This is why a robot that speaks in a tinny, flat voice feels unapproachable, even when it’s doing something useful.

Picture a robot caregiver reading an elderly patient their medication schedule. A staccato delivery may be confusing or off-putting. But a more nuanced, natural voice conveys reassurance, even care. When robots can talk in ways that sound emotionally intelligent, they move from objects to companions.

Natural speech humanizes robots. It lets machines connect with people on a level beyond the purely functional, which is essential in environments such as elder care, childcare, or hospitality.

Sounding Human Helps Robots Build Real Trust

We don’t only listen to what robots tell us; we respond to the way they say it, particularly in settings where trust is paramount: hospitals, disaster areas, and customer service counters. A robotic-sounding voice can leave users uncomfortable or less inclined to follow its instructions.

A well-engineered voice can soothe, persuade, and even establish rapport. After all, people are more likely to believe friendly, sympathetic voices than cold or clipped ones.

Voice design is not only aesthetic; it’s psychological. When robots communicate in a natural voice, people are more inclined to listen, cooperate, and even confide in them.

This ability to build trust makes natural AI voice a powerful tool for integrating robots into daily life.

Real Dialogues Require Realistic Robot Voices

It’s not sufficient that robots talk. They must talk back. Genuine back-and-forth dialogue depends on rhythm, timing, and the emotional quality of voice. When a robot stumbles or stresses the wrong word, it interrupts the flow of conversation and leaves the listener confused.

For robots to hold actual conversations, whether they’re answering questions, giving directions, or offering help, they need voices that follow human speech patterns.

Tone of voice, hesitation, and even slight sighs, pauses, or shifts in pace can make an exchange feel surprisingly human.

Voice is how we decode intent. With expressive AI-synthesized speech, robots can now have conversations that feel natural, not awkward.
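For developers wondering what pauses and pacing look like in practice, here is a minimal sketch. It assumes the TTS service in question accepts SSML (the W3C Speech Synthesis Markup Language), which not every API does. The `to_ssml` helper and the sample phrases are hypothetical and purely illustrative; only the `speak`, `prosody`, and `break` elements come from the SSML standard.

```python
from xml.sax.saxutils import escape


def to_ssml(phrases, rate="medium", pitch="medium"):
    """Build an SSML string from (text, pause_ms) pairs.

    Hypothetical helper: each phrase is wrapped in one shared <prosody>
    element, and a <break> is added after any phrase whose pause_ms > 0.
    """
    parts = ["<speak>", f'<prosody rate="{rate}" pitch="{pitch}">']
    for text, pause_ms in phrases:
        # Escape &, < and > so the phrase cannot break the markup.
        parts.append(escape(text))
        if pause_ms > 0:
            parts.append(f'<break time="{pause_ms}ms"/>')
    parts.append("</prosody>")
    parts.append("</speak>")
    return "\n".join(parts)


# Illustrative phrases only; a slower rate and short pauses soften delivery.
ssml = to_ssml(
    [
        ("Good morning.", 400),
        ("It is time for your morning medication.", 250),
        ("Take your time, I am right here.", 0),
    ],
    rate="slow",
)
print(ssml)
```

The resulting string would then be sent to whichever TTS endpoint the robot uses. How much of SSML a given voice actually honors varies by provider, so it is worth listening to the output rather than trusting the markup.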

Clearer Voices Make Tech Easier for Everyone

Natural AI voice is also important for accessibility. For users who are visually impaired, older, or living with cognitive challenges, voice is the primary interface for communication.

Now imagine that same interface speaking in stuttering, robotic bursts. It’s annoying and, over time, mentally exhausting. A clear, expressive voice is easier to process, which leads to more successful task completion, less anxiety, and greater comfort.

Robots deployed in eldercare facilities, rehab centers, and schools are already demonstrating the power of a soft, natural voice to reshape the experience. When robots communicate like humans, they can help more people, regardless of age or ability.

Improved Voice = Reduced Mental Effort in Interactions

Ever struggled to follow a GPS voice that speaks too quickly or sounds as if it’s underwater? That frustration multiplies when interacting with robots in fast-paced settings such as factories, airports, or emergency response areas.

An awkward voice interface adds to cognitive load – users have to do more work to comprehend, remember, and respond. But a natural, well-spoken voice is intuitive. It takes less mental effort, making it easier to follow directions and interact.

Where rapid decisions are a matter of life and death, natural voice is not a nicety – it’s a requirement. It will make robots better partners, not merely better machines.

Conclusion: The Robots That Talk Like Us Will Win the Future

The future of robotics is not just better arms or faster processors; it’s greater presence. And nothing conveys presence like voice. When a robot speaks well, it doesn’t merely do its job. It makes people feel heard, respected, and even cared for.

Whether helping with disaster relief, assisting children with homework, or guiding travelers through transport hubs, robots will need to master natural speech to succeed.

AI TTS APIs give developers the tools to make this transition, allowing machines to speak the way we do.

As we move beyond beeps and boops, the robots that sound human and fit right in among us will be the ones that lead the way.