From the biggest to the smallest: Hitachi takes first nimble steps in robotics market

Exclusive interview with Dong Li, research engineer at Hitachi R&D Europe, about the company’s new humanoid robot, Emiew

At street level, the Barbican area of London, England has the strange quality of looking like a small underground city. But it’s actually not.

True, it has an Underground railway station, but the rest of the commercial and cultural district – with its museums and office blocks – is mostly above ground.

It may be because the buildings are so imposing that they overshadow the often narrow streets and block out the sun in some places. The bridges overhead connecting one set of buildings to another, or one side of the road to the other, and the tunnels that go underneath the building complexes themselves, exacerbate the feeling of being underground.

But the noise-cancelling effect – or something – of so many densely packed, large structures probably makes it a good area to set up a research and development centre. Hitachi obviously thought so anyway.

Hitachi, the massive Japanese conglomerate with a market capitalisation of around $35 billion, has located its European R&D centre in the Barbican area of London, and although the company’s main business is in giant infrastructure and transport projects, the office is also a hub for its activities in the area of robotics.

Hitachi makes a lot of electrical equipment for business and consumer markets, but robotics is not something it’s been involved in before to any great extent.

Hitachi spends approximately $3 billion worldwide on its research and development activities.

And, given its background in construction and other large-scale projects, it’s surprising that the first robot it has produced for the market is a tiny little humanoid called Emiew.

Robotics and Automation News asked for an invitation to visit Hitachi’s Europe R&D centre to meet Emiew and have a conversation with the talking machine. (See video below.)

Exist long and interact

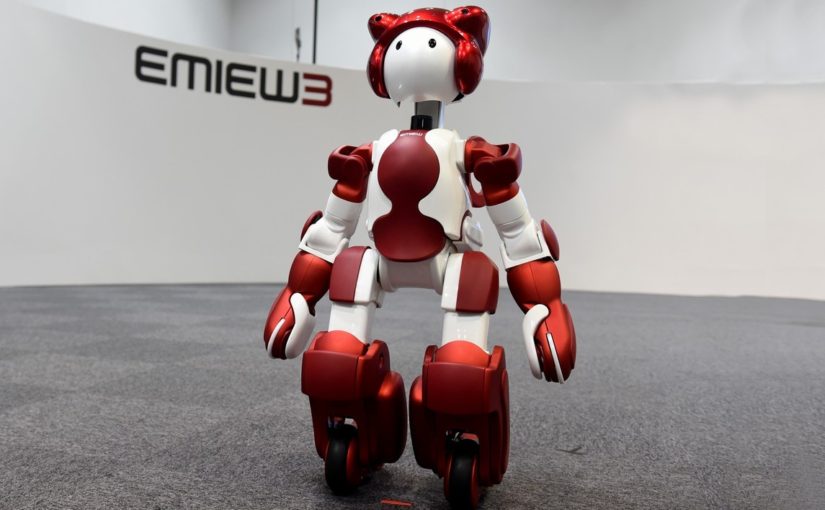

Emiew, short for Excellent Mobility and Interactive Existence, probably belongs in the service robot, or assistive robot, category.

It’s about a metre tall and gets around on wheels. Perhaps the most important thing it does is communicate, especially since Hitachi says it’s designed for customer service.

Similar robots at other companies and academic organisations have been used for R&D purposes, but Hitachi is looking for business applications for the product because it’s been shown that there is money in this market.

Maybe it’s a drop in the ocean from Hitachi’s point of view, since the company generated revenues of almost $90 billion last year, part of it through shipbuilding, but Hitachi doesn’t want to miss out on what could be a massive market in the future.

The other humanoid robot that is similar to Emiew – in terms of dimensions and colour scheme at least – is Nao, which is one of SoftBank Robotics’ products.

Nao retails for around $10,000 a unit. Maybe you can buy in bulk and get a discount, but even at half price, that’s a lot of money for a little robot.

SoftBank says it has sold 10,000 of the Nao robots, which at the list price would come to about $100 million in revenue, or $50 million at half price. Needless to say, that’s a lot of money.

What makes Nao, Emiew, and any robot – humanoid or otherwise – expensive is the amount of work that goes into its development.

There are many challenges in terms of hardware, software and connectivity, not to mention the development of customised features and functionalities for specific environments and applications.

That’s not even considering the complexity of the manufacturing process, whether it’s small batch or mass production.

But they are challenges Hitachi clearly thinks are worth meeting.

A robot of the people

In an exclusive interview with Robotics and Automation News, Dong Li, research engineer at Hitachi R&D, outlined the company’s objectives in developing Emiew, its potential applications, business use cases, and the company’s research and development goals.

“My first degree was in robotics,” says Li. “But robotics is actually quite a wide area. My interest is more in the software side.”

This is the third generation of the Emiew robot, and it’s already found employment at Haneda airport, in Japan, and Li says the company is looking at other transport hubs, especially since it supplies trains, ships and other equipment which routinely pass through those hubs.

“Emiew is a humanoid robot,” says Li. “It’s designed to provide customer service – that’s its main purpose.

“It travels quite steadily, and reasonably fast. It has reasonably very good ears. It has a microphone array which can detect human speech, even in a noisy environment.

“And, in our opinion, it’s a great industrial design.”

But why is Hitachi even interested in building robots?

Li says: “Hitachi’s research and development has the ambition to create a society in which a human can live in harmony with robots, an environment in which robots can provide help to humans.

“So that’s our vision of future society.

“Based on that vision, we have done a lot of work, and developed this robot.

“I think that is our main motivation to carry out work in this area.”

Is Hitachi developing other robots?

“In R&D, as far as I know, Emiew is the main robot we have developed, and it has evolved from Emiew generation one to, now, generation three.

“So, people have put in effort continuously to improve it.”

Lost in translation

Emiew 3 performs complex manoeuvres effortlessly. It gets around the Hitachi R&D centre with ease, turning left and right, moving forwards and backwards without any apparent hesitation or indecision.

But the main function of the robot is expected to be talking to customers, interacting with people who want to ask it questions and who need to hear accurate answers back.

This is actually a very tough programming challenge, as all the big tech giants have found out – whether it’s Apple, Google, Amazon or others. All of them are developing computers that can communicate with users using voice.

This voice-based computing relies on what is often called “natural language processing”, and although examples such as Apple’s Siri, Google’s speech-to-text, and Amazon’s Alexa are very impressive in the way they can understand and process what a human says, they still have a long way to go before they can engage in conversation as adeptly as Emiew moves around.

I’ve used Google’s speech-to-text and it works really well. I’m not accustomed to working that way, but if I could adjust, it would probably save me a lot of typing.

But in order for me – and maybe many others – to use our voices to interact with a computer, its natural language processing has to be perfect, or at least pass a certain threshold where its imperfections are negligible.

This is a threshold many companies are racing each other to try and cross. None have quite done it yet, apparently, probably because it’s a very difficult technical issue – one that might require further improvements in the hardware as well as software.

But how is Hitachi doing in this race?

“It’s difficult to do benchmarking,” says Li. “We have a 14-microphone array which cancels background noise very well. So, in terms of catching what a human says, I think our robot has some advantages.

“I think both [hardware and software] are very important.

“Emiew has some advantages in picking up sound because of the hardware side. Both are very important to make the conversation work.”

Moore’s law of comprehension

Most people would probably accept that both the hardware and software problems posed by natural language processing will eventually be resolved, and computers and robots will be able to understand the average human speaker just as well as another average human would – perhaps even better.

At the point when that happens, it’s easy to imagine how quickly the market will grow for such technology. For one thing, no one would have to type again, and keyboards would largely become a thing of the past.

And, like many other companies, Hitachi is exploring the possibilities of such new technologies and preparing for a future world which can almost be seen.

“Hitachi R&D has developed this robot – hardware and software,” says Li. “Our main capability is still in Japan. These three Emiews arrived here, in London, in April this year.

“Here, in Hitachi R&D Europe, we want to explore possible business cases with potential customers.

“Through these discussions there may be new opportunities identified, so perhaps there is an opportunity to create a new business for Hitachi, so that is an important role for Emiew here.

“And as a researcher, myself and my team are working on making Emiew work and developing new technologies in a few different areas.

“As you can imagine, in Japan, we are looking into some areas, and me and my colleagues here are looking into a few areas as well.

“For example, this year, I am working on improving its conversational skills. And my colleague is creating a virtual Emiew, so people can put on goggles and see Emiew in virtual reality, and interact with it without having the real robot, in order to understand how the robot works.

“We develop that from here [in Hitachi Europe R&D] as well.”

But what is the commercial potential now for robots such as Emiew?

“In Europe we are, at this moment, engaging with potential customers,” says Li. “So the purpose is to find potential business cases.

“Of course, airports and railway stations are the areas we already identified.

“In Japan, we have researchers further working out how to get Emiew working better in those areas.

“But here in Europe, we have people trying to find out other areas to further explore.

“Perhaps in the European market there are some differences when compared with the Chinese market, which we can potentially go into.

“That’s from the business perspective.

“From the research perspective, what I do, and what my team does, is that we have to develop functions that can support those business cases that are identified. Otherwise the business case cannot be realised.

“From a research point of view, myself and my colleagues are working on areas that has the potential to support the business case.”

All the lonely people

Like many advanced industrial nations, Japan has an ageing population. Younger people are often so busy with work and other everyday activities that they struggle to find the time to phone or visit their elderly relatives.

This means that many retired people are spending a lot of time alone. And they might not always be physically and mentally as strong as they used to be when they were younger.

And like many other Japanese companies, Hitachi is looking to capitalise on this market of an increasingly large community of elderly people who might need help around the home and reminders of tasks they need to perform.

“That provides a good business case for the robots in Japan,” admits Li. “The population is ageing and Japanese people are quite open to robotics technology.

“So we designed the robot in order to provide support and help.”

But he accepts that navigating a home environment is a “challenging task” because of the smaller spaces and more complex layout. Office environments and transport hubs tend to have more open spaces, which seems ideal for Emiew.

“Our robot is equipped with lidar [light detection and ranging] so it detects the surrounding areas,” says Li.

“We are always working on developing its capability to detect obstacles and to avoid them.

“Obviously that is not very easy, so that is one area we are working on. Of course a home environment has physical limitations.”

Often, tech companies overcome development obstacles by opening up to the developer community.

They tend to – though not always – provide a software development kit so outside companies and individuals can create software which can do specific tasks.

This is probably more common in the computer technology sector – Apple, Google, Amazon and so on – but such opening up to the prevailing open-source or collaborative development culture is relatively new to old industrial companies.

Hitachi may or may not launch an SDK for Emiew in the future, but it’s not completely closed off to the possibility.

“At the moment we have the software all in-house, so we haven’t got an API for outside developers,” says Li.

The main code for Emiew is built on the Robot Operating System (ROS), so Li accepts there is potential for an SDK, but it depends on “if Hitachi finds it suitable, if they see a good business case”.

At the moment, he says, the company is concentrating on the areas in which it has significant experience.

“According to the market research at Hitachi, we identify several areas in which a humanoid robot can have a big market.

“We’ve identified transport hubs as one area where humanoid robots have potential.

“I’m sure other companies have realised similar things.”

Emiew’s ability to speak multiple languages would probably also lend weight to the argument in favour of an SDK. No single company, even one of Hitachi’s size, could easily learn all the nuances and subtleties of local accents in the many countries the robot may end up operating in, not to mention individual speakers’ particular styles.

But transport hubs are a good starting place for Emiew to learn more and show off what it can do, says the company.

The idea is that when Emiew is spoken to in a particular language, its brain can learn to compute that language and respond appropriately, says Hitachi.

Robots from hell

Japan is famous for building humanoid robots.

One Japanese roboticist – Professor Hiroshi Ishiguro, of Osaka University’s Intelligent Robotics Laboratory – has developed humanoid robots so life-like that the whole world is still totally terrified by them years after they first emerged.

Well, maybe not terrified, and maybe not the whole world, but the robots are definitely disturbingly realistic.

Professor Ishiguro even made one robot in his own image (picture above), which was weird on a level that has yet to be understood or adequately articulated.

Japanese people have always been more open to, and less fearful of, robots than people in other countries, but even they find some of these new humanoid robots creepy.

And the differences between humanoids like Emiew and the diabolical ones Professor Ishiguro has apparently harvested from the very depths of hell as part of some dastardly deal with the devil could be said to be mostly superficial.

Apart from actuation – movements – and appearances, at root, they are both computers running artificial intelligence programmes. And when the natural language processing problem is solved, they’ll presumably both be as scary as each other.

Scared of its own shadow

With Professor Ishiguro’s nightmare-inducing humanoids involuntarily in mind, what is the future for Emiew and Hitachi?

“It’s a big question,” says Li. “The goal is to make robots which help humans.

“Transport hubs – airports and railway stations – is one area, home use is another potential area, especially where there may be people who are lonely, who are elderly, and they may need some reminders, and they may need someone to talk with, or they may need a friendly interface to communicate with, and to connect them to the outer world.

“I would say humanoid robots, because of its design, is relatively easier to make a connection with, for a human.

“So home care is another potential market.

“Also, at the moment, the functionality of humanoid robots are limited [compared to a human], but currently there are still many companies who want to have a fleet of robots because they want to improve their company image, to make people think, ‘This company is embracing new technology’.

“To give a more specific example, in the railway stations, the trains are sometimes delayed, they don’t always follow the schedule.

“At the moment, if I’m a passenger, I could approach a member of staff who may know what is going on, and they may be able to give me an idea of when the train will arrive.

“But a humanoid robot can be connected to a background computer system which has the schedule, so they have potential to provide more up-to-date information than a human and give more optimised advice.”
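As a rough illustration of the scenario Li describes, the sketch below shows how a robot with access to a timetable and a live delay feed might work out what to tell a passenger. It is not Hitachi’s software: the function name `arrival_message`, the train identifiers and the delay data are all hypothetical, made up for this example.

```python
from datetime import datetime, timedelta


def arrival_message(train_id, scheduled, delays_minutes):
    """Build a spoken-style update for one train.

    train_id       -- hypothetical identifier for the service
    scheduled      -- timetabled arrival as a datetime
    delays_minutes -- hypothetical live feed: {train_id: minutes late}
    """
    # A train missing from the delay feed is assumed to be on time.
    delay = delays_minutes.get(train_id, 0)
    expected = scheduled + timedelta(minutes=delay)
    if delay > 0:
        return (
            f"Train {train_id} is running {delay} minutes late "
            f"and is now expected at {expected:%H:%M}."
        )
    return f"Train {train_id} is on time and expected at {expected:%H:%M}."


if __name__ == "__main__":
    live_delays = {"A1": 12}  # made-up data for illustration
    print(arrival_message("A1", datetime(2017, 1, 1, 9, 30), live_delays))
    print(arrival_message("B2", datetime(2017, 1, 1, 9, 45), live_delays))
```

The point of the sketch is simply that the answer is computed from live data each time it is asked for, rather than depending on what a member of staff happens to know.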

One solution to the audio processing limitations, which may or may not be part of the natural language processing conundrum, could lie in providing a way to communicate with the robot by text message.

Text messaging could be helpful to someone who speaks in a way that’s difficult for others to understand.

“Yes, that’s theoretically possible,” says Li. “At this point, Emiew has no interface for that. But the technology – generally speaking – with these robots have several stages to transfer a voice into an understandable command.

“The first step is speech to text. The second step is to change the text into a meaningful thing that the robot can understand.

“So, what you describe, the scenario in which the voice application is not able to understand you, so the voice-to-text translation may not be working well enough.

“But yes, there is a second stage, so you can enter text directly into the second-stage processing, which translates the text into something the robot can understand.

“We have our own software for the first stage, where it translates – or, more appropriately transcribes – the human voice into text.

“The second stage – although I wasn’t working on it myself – I heard is from a software company in Japan.”
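The two stages Li outlines, and the idea of feeding typed text straight into the second one, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: every name here (`Command`, `speech_to_text`, `text_to_command`, `handle_voice`, `handle_text`) is hypothetical, the “speech recognition” is a stand-in that decodes bytes, and the keyword rules bear no relation to the software Hitachi or its supplier actually uses.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Command:
    """A structured instruction the robot could act on (hypothetical)."""
    intent: str
    argument: Optional[str] = None


def speech_to_text(audio: bytes) -> str:
    """Stage 1 stand-in: a real system would run speech recognition here.

    For this sketch the 'audio' is just the spoken words as UTF-8 bytes.
    """
    return audio.decode("utf-8")


def text_to_command(text: str) -> Command:
    """Stage 2 stand-in: turn text into a command with simple keyword rules."""
    words = text.lower().strip(" ?!.").split()
    if "where" in words:
        # Treat the last word as the place being asked about.
        return Command(intent="give_directions", argument=words[-1])
    if "when" in words and "train" in words:
        return Command(intent="report_train_time")
    return Command(intent="ask_to_repeat")


def handle_voice(audio: bytes) -> Command:
    """Full pipeline: stage 1 followed by stage 2."""
    return text_to_command(speech_to_text(audio))


def handle_text(message: str) -> Command:
    """Text-message route: skip stage 1 and go straight to stage 2."""
    return text_to_command(message)


if __name__ == "__main__":
    print(handle_voice(b"Where is the lift?"))
    print(handle_text("When is the next train?"))
```

Because both routes end in the same second stage, a typed message would be interpreted exactly as a correctly transcribed sentence would be, which is why a text interface could help a speaker the first stage struggles with.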

Like most other machines – computers, robots, software, whatever – which try and understand human speech, Emiew has its limitations.

Humans who mumble or speak in unusual accents or styles might find it difficult to communicate – or interface – with such machines, but the computer industry and the developers of the necessary artificial intelligence are making progress.

Moreover, Google, Apple and Amazon and many others have opened up their natural language processing systems – which usually involve massive computing or neural networks in the background – to outside companies, like Hitachi, to use in their devices.

But they’re not “plug and play” or “turnkey” solutions, no matter how simple those companies might make their application programming interfaces or SDKs sound.

Computing, programming, robotics and AI are still very challenging. Otherwise we’d all be building robots like Emiew and making $100 million like SoftBank has done by selling 10,000 Nao robots.

“I think that [natural language processing] is the difficult part, so yes, it is the area that companies are competing with each other to make better, and it’s something we’re doing as well,” says Li.